Continuing on topics from code reviews.

Last year I saw some C# code working very hard to process an application config file like the following:

    {
      "Storage": {
        "Timeout": "25",
        "Blobs": [
          {
            "Name": "Primary",
            "Url": "foo.com"
          },
          {
            "Name": "Secondary",
            "Url": "bar.com"
          }
        ]
      }
    }

Fortunately, the Options framework in ASP.NET Core understands how to map this JSON into C#, including the Blobs array. All we need are some plain classes that follow the structure of the JSON.

    public class AppConfig
    {
        public Storage Storage { get; set; }
    }

    public class Storage
    {
        public int TimeOut { get; set; }
        public BlobSettings[] Blobs { get; set; }
    }

    public class BlobSettings
    {
        public string Name { get; set; }
        public string Url { get; set; }
    }

Then, we set up our IConfiguration for the application.

    var config = new ConfigurationBuilder()
        .AddJsonFile("appsettings.json")
        .Build();

And once you’ve brought in the Microsoft.Extensions.Options package, you can configure the IOptions service and make AppConfig available.

    public void ConfigureServices(IServiceCollection services)
    {
        // ...
        services.AddOptions();
        services.Configure<AppConfig>(config);
    }

With everything in place, you can inject IOptions<AppConfig> anywhere in the application, and the object will have the settings from the configuration file.
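As a minimal sketch of what that injection can look like, here is a consumer class that reads the bound settings through `IOptions<AppConfig>.Value`. The `BlobDirectory` class and its `Describe` method are hypothetical names invented for illustration; the settings classes are repeated from above only so the sketch compiles on its own.

```csharp
using System.Collections.Generic;
using System.Linq;
using Microsoft.Extensions.Options;

// Settings classes repeated from the post so the sketch compiles on its own.
public class AppConfig { public Storage Storage { get; set; } }
public class Storage
{
    public int TimeOut { get; set; }
    public BlobSettings[] Blobs { get; set; }
}
public class BlobSettings
{
    public string Name { get; set; }
    public string Url { get; set; }
}

// Hypothetical consumer: any class resolved from the container can ask for
// IOptions<AppConfig> in its constructor.
public class BlobDirectory
{
    private readonly AppConfig _config;

    public BlobDirectory(IOptions<AppConfig> options)
    {
        // Value holds the AppConfig instance populated from configuration.
        _config = options.Value;
    }

    public IEnumerable<string> Describe()
    {
        return _config.Storage.Blobs.Select(b => $"{b.Name}: {b.Url}");
    }
}
```

In a unit test you can skip the container and wrap a hand-built object with `Options.Create(new AppConfig { ... })`.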

Continuing with topics based on ASP.NET Core code reviews.

Here is a bit of code I came across in an application’s Startup class.

    public void ConfigureServices(IServiceCollection services)
    {
        services.AddScoped<IStore<User>, SqlStore<User>>();
        services.AddScoped<IStore<Invoice>, SqlStore<Invoice>>();
        services.AddScoped<IStore<Payment>, SqlStore<Payment>>();
        // ...
    }

The actual code ran for many more lines, with the general idea that the application needs an IStore implementation for a number of different object types in the system.

Because ASP.NET Core understands unbound generics, there is only one line of code required.

    public void ConfigureServices(IServiceCollection services)
    {
        services.AddScoped(typeof(IStore<>), typeof(SqlStore<>));
    }

Unbound generics are not useful in day-to-day business programming, but if you are curious how the process works, I show how to use unbound generics at a low level in my C# Generics course.

One downside to this approach is the fact that you might experience a runtime error (instead of a compile error) if a component requests an implementation of IStore<T> that isn’t possible. For example, if a concrete implementation of IStore<T> uses a generic constraint of class, then the following would happen:

    Assert.Throws<ArgumentException>(() =>
    {
        services.GetRequiredService<IStore<int>>();
    });

However, this problem should be avoidable.
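To make the runtime behavior more concrete, here is a rough sketch of the reflection step a container has to perform to turn an unbound generic into a usable type. This is not the ASP.NET Core implementation, only an illustration; `OpenGenericDemo` and `Close` are made-up names, and the `IStore<T>` and `SqlStore<T>` definitions are stand-ins for the types in the review.

```csharp
using System;

// Stand-in types; the class constraint mirrors the scenario described above.
public interface IStore<T> { }
public class SqlStore<T> : IStore<T> where T : class { }
public class User { }

public static class OpenGenericDemo
{
    // Close an unbound generic type over a type argument and create an instance.
    public static object Close(Type openType, Type argument)
    {
        // MakeGenericType checks generic constraints at runtime.
        return Activator.CreateInstance(openType.MakeGenericType(argument));
    }

    public static void Main()
    {
        object store = Close(typeof(SqlStore<>), typeof(User));
        Console.WriteLine(store is IStore<User>); // True

        try
        {
            // int is a value type, so it violates the class constraint.
            Close(typeof(SqlStore<>), typeof(int));
        }
        catch (ArgumentException)
        {
            Console.WriteLine("int violates the class constraint");
        }
    }
}
```

The compiler never sees `SqlStore<int>` written out, which is why the failure can only surface when the type is closed at runtime.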

This is the first post in a series of posts based on code reviews of systems where ASP.NET Core is involved.

I recently came across code like the following:

    public class FaultyMiddleware
    {
        public FaultyMiddleware(RequestDelegate next)
        {
            _next = next;
        }

        public async Task Invoke(HttpContext context)
        {
            // saving the context so we don't need to pass around as a parameter
            this._context = context;
            DoSomeWork();
            await _next(context);
        }

        private void DoSomeWork()
        {
            // code that calls other private methods
        }

        // ...

        HttpContext _context;
        RequestDelegate _next;
    }

The problem here is a misunderstanding of how middleware components work in the ASP.NET Core pipeline. ASP.NET Core uses a single instance of a middleware component to process multiple requests, so it is best to think of the component as a singleton. Saving state into an instance field is going to create problems when there are concurrent requests working through the pipeline.

If there is so much work to do inside a component that you need multiple private methods, a possible solution is to delegate the work to another class and instantiate the class once per request. Something like the following:

    public class RequestProcessor
    {
        private readonly HttpContext _context;

        public RequestProcessor(HttpContext context)
        {
            _context = context;
        }

        public void DoSomeWork()
        {
            // ...
        }
    }

Now the middleware component has the single responsibility of following the implicit middleware contract so it fits into the ASP.NET Core processing pipeline. Meanwhile, the RequestProcessor, once given a more suitable name, is a class the system can use anytime there is work to do with an HttpContext.
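Here is one way the slimmed-down middleware might look, as a sketch rather than the reviewed application's actual code. `WorkMiddleware` is a hypothetical name, the body of `DoSomeWork` is a placeholder I invented, and `RequestProcessor` is repeated only so the example compiles on its own.

```csharp
using System.Threading.Tasks;
using Microsoft.AspNetCore.Http;

// Per-request helper from above, repeated so the sketch compiles on its own.
public class RequestProcessor
{
    private readonly HttpContext _context;

    public RequestProcessor(HttpContext context)
    {
        _context = context;
    }

    public void DoSomeWork()
    {
        // Placeholder work: mark the request as processed.
        _context.Items["processed"] = true;
    }
}

public class WorkMiddleware
{
    // The only instance state is the next delegate, which is safe to share.
    private readonly RequestDelegate _next;

    public WorkMiddleware(RequestDelegate next)
    {
        _next = next;
    }

    public async Task Invoke(HttpContext context)
    {
        // A new processor per request keeps request state off the middleware.
        var processor = new RequestProcessor(context);
        processor.DoSomeWork();
        await _next(context);
    }
}
```

The component would be registered as usual with `app.UseMiddleware<WorkMiddleware>()`.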

My latest Pluralsight course is live and covers Azure from a .NET developer’s perspective. Some of what you’ll learn includes:

- How to create an app service to host your web application and API backend

- How to monitor, manage, debug, and scale an app service

- How to configure and use an Azure SQL database

- How to configure and use a DocumentDB collection

- How to work with storage accounts and blob storage

- How to take advantage of serverless computing with Azure Functions

- How to set up a continuous delivery pipeline into Azure from Visual Studio Team Services

- And much more …

And here is some early feedback from the Twitterverse:

.@OdeToCode Pluralsight course on .Net on Azure is pure gold, and I'm an Azure veteran; don't let the beginner tag fool you! Many tips!

— Ryan Dowling (@ryancei) March 27, 2017

@OdeToCode Scott, your courses on @pluralsight are the best. Nearly finisehd @Azure course.. plz we want more .net & web dev courses :) thx

— mo (@meemo_86) March 22, 2017

Picked up a lot of tricks & tips from @OdeToCode's latest @pluralsight course on #azure: Monitoring, debugging, automation, to name a few!! pic.twitter.com/BtkXnU0Ira

— MJ Alwajeeh (@MJAlwajeeh) March 14, 2017

@OdeToCode @pluralsight midst of watching Developing .NET on Microsoft Azure-getting started & I absolutely love❤ it sir!

— venkatesh vbn (@venkivalleri) March 13, 2017

THANK YOU @DonovanBrown & @OdeToCode my first Azure CI/CD pipeline! Very impressed! #FridayNightDevOps, who said it can't be fun! pic.twitter.com/22AZHzvxpC

— James (@james_w_allen) March 18, 2017

Thanks for watching!

The title here is based on a book I remember in my mom’s kitchen: The Joy of Cooking. The cover of her book was worn, while the inside was dog-eared and bookmarked with notes. I started reading my mom’s copy when I started working in a restaurant for spending money. In the days before TV channels dedicated to cooking, I learned quite a bit about cooking from this book and on-the-job training. The book is more than a collection of recipes. There is prose and personality inside.

I have a copy in my kitchen now.

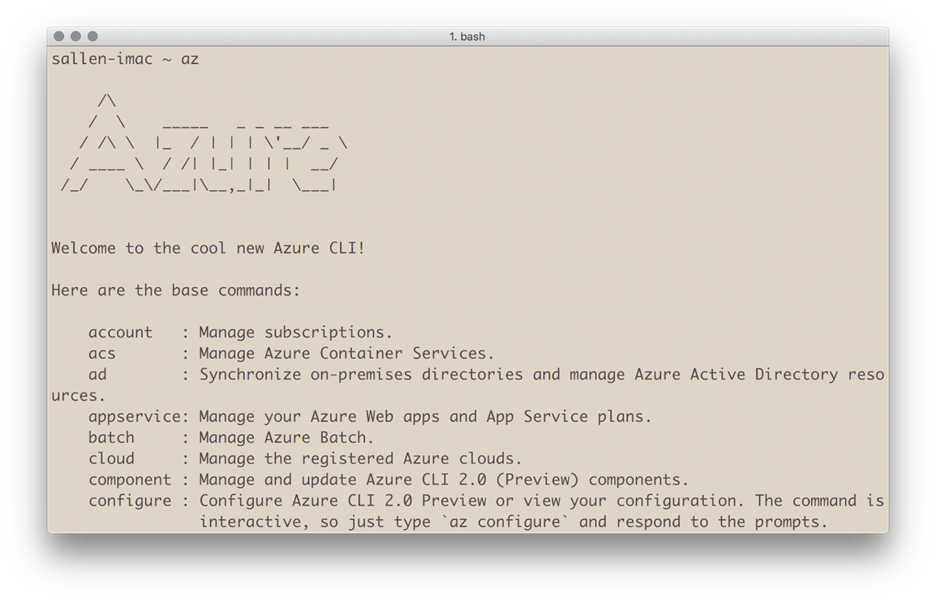

Azure CLI 2

The new Azure CLI 2 is my favorite tool for Azure operations from the command line. The installation is simple and does have a dependency on Python. I look at the Python dependency as a good thing, since Python allows the CLI to work on macOS, Windows, and Linux. You do not need to know anything about Python to use the CLI, although Python is a fun language to learn and use. I’ve done one course with Python and one day hope to do more.

The operations you can perform with the CLI are easy to find, since the tool organizes operations into hierarchical groups and sub-groups. After installation, just type “az” to see the top-level commands (many of which are not in the picture).

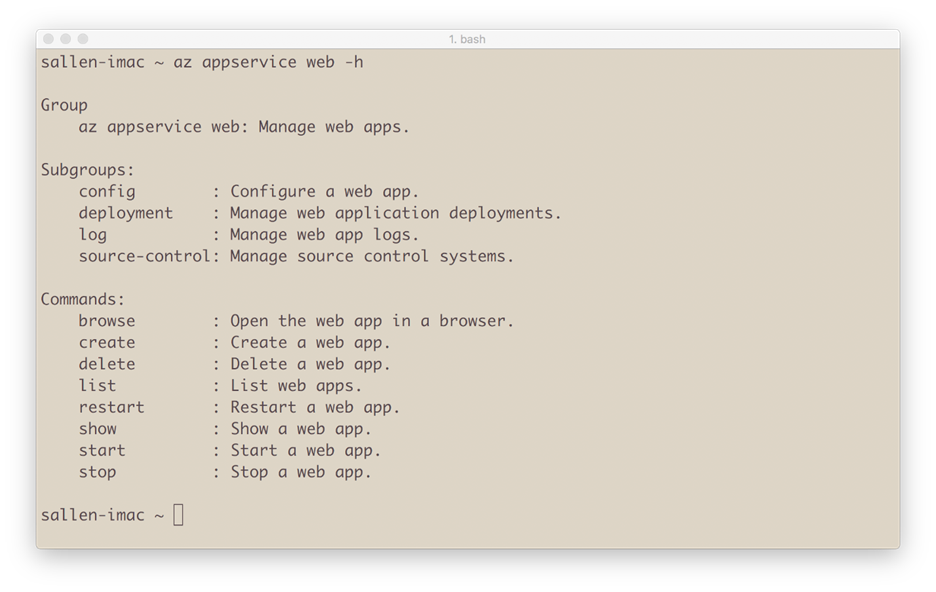

You can use the ubiquitous -h switch to find additional subgroups. For example, here are the commands available for the “az appservice web” group.

For many scenarios, you can use the CLI instead of using the Azure portal. Let’s say you’ve just used a scaffolding tool to create an application with Node or .NET Core, and now you want to create a web site in Azure with the local code. First, we’d place the code into a local git repository.

    git init
    git add .
    git commit -a -m "first commit"

Now you use a combination of git and az commands to create an app service and push the application to Azure.

    az group create --location "Eastus" --name sample-app
    az appservice plan create --name sample-app-plan --resource-group sample-app --sku FREE
    az appservice web create --name sample-app --resource-group sample-app --plan sample-app-plan
    az appservice web source-control config-local-git --name sample-app --resource-group sample-app
    git remote add azure "https://[url-result-from-previous-operation]"
    git push azure master

We can then have the CLI launch a browser to view the new application.

az appservice web browse --name sample-app --resource-group sample-app

To shorten the above commands, use -n for the name switch, and -g for the resource group name.

Joyous.

I’ve been doing some work where I thought Power BI Embedded would make for a good solution. The visuals are appealing and modern, and for customization there is the ability to use D3.js behind the scenes. I was also encouraged to see support in Azure for hosting Power BI reports. There were a few hiccups along the way, so here are some notes for anyone trying to use Power BI Embedded soon.

The Get started with Microsoft Power BI Embedded document is a natural place to go first. A good document, but there are a few key points that are left unsaid, or at least understated.

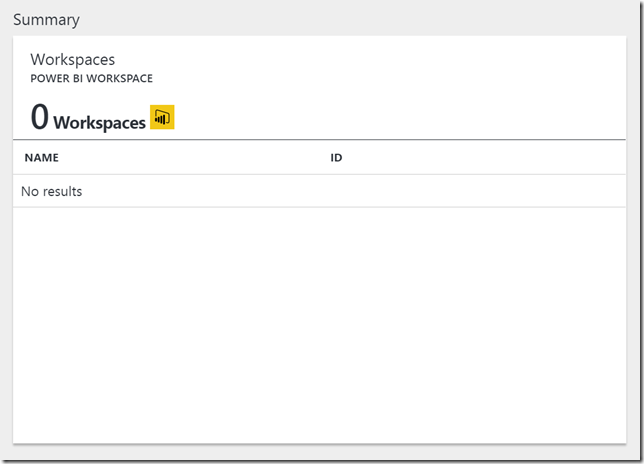

The first few steps of the document outline how to create a Power BI Embedded Workspace Collection. The screen shot at the end of the section shows the collection in the Azure portal with a workspace included in the collection. However, if you follow the same steps you won’t have a workspace in your collection; you’ll have just an empty collection. This behavior is normal, but combined with some of the other points I’ll make, it added to the confusion.

Not mentioned in the portal or the documentation is the fact that the Workspace collection name you provide needs to be unique in the Azure world of collection names. Generally, in the Azure portal, the configuration blades will let you know when a name must be unique (by showing a domain the name will prefix). Power BI Embedded works a bit differently, and when it comes time to invoke APIs with a collection name it will make more sense to think of the name as unique. I’ll caveat this paragraph by saying I am deducing the uniqueness of a collection name based on behavior and API documentation.

After creating a collection you’ll need to create a workspace to host reporting artifacts. There is currently no UI in the portal or PBI desktop tool to create a workspace in Azure, which feels odd. Everything I’ve worked with in the Azure portal has at least a minimal UI for common configuration of a resource, and creating a workspace is a common task.

Currently the only way to create a workspace is to use the HTTP APIs provided by Power BI. For automated software deployments, the API is a must-have, but for experimentation it would also be nice to have a more approachable workspace setup to get the feel of how everything works.

There are two sets of APIs to know about. There are the Power BI REST Operations, and the Power BI Resource Provider APIs. You can think of the resource provider APIs as the usual Azure resource provider APIs that would be attached to any type of resource in Azure – virtual machines, app services, storage, etc. You can use these APIs to create a new workspace collection instead of using the UI in the portal. You can also achieve common tasks like listing or regenerating the access keys. These APIs require an Azure access token from Azure AD.

The Power BI REST operations allow you to work inside a workspace collection to create workspaces, import reports, and define data sources. The API appears to be missing some orthogonality: you can use an HTTP POST to create workspaces and reports and an HTTP GET to retrieve resource definitions, but in many cases there are no HTTP DELETE operations to remove an item. These Power BI operations have a different base URL than the resource manager operations (they use https://api.powerbi.com), and they do not require a token from Azure AD. All you need for authorization is one of the access keys defined by the workspace collection.

The mental model to have here is the same model you would have for Azure Storage or DocumentDB, as two examples. There are the APIs to manage the resource, which require an AD token (like to create a storage account), and then there are the APIs to act as a client of the resource, and these APIs require only an access key (like to upload a blob into storage).

To see how you can work with these APIs, Microsoft provides a sample console mode application on GitHub. After I cloned the repo I had to fix NuGet package references and assembly reference errors. Once I had the solution building, there were still 6 warnings from the C# compiler, which is unfortunate.

If you want to run the application just to create your first workspace, or you want to borrow some code from the application to put in your own, there is one issue that had me stumped for a bit until I stepped through the code with a debugger. Specifically, this line of code:

var tenantId = (await GetTenantIdsAsync(commonToken.AccessToken)).FirstOrDefault();

If you sign into Azure using an account associated with multiple Azure directories, this line of code will only grab the first tenant ID, which might not be the ID you need to access the Power BI workspace collection you’ve created. This happened to me when trying the simplest possible operation in the example program, which is to get a list of all workspace collections, and initially led me to the wrong assumption that every Power BI operation required an AAD access token.

When combined with the other idiosyncrasies listed above, the sample app behavior got me to question if Power BI was ever going to work.

But, like with many technologies, I just needed some persistence, some encouragement, a bit of luck, and some sleep to allow the mental model to sink in.

When creating .NET Core and ASP.NET Core applications, programmers have many options for data storage and retrieval available. You’ll need to choose the option that fits your application’s needs and your team’s development style. In this article, I’ll give you a few thoughts and caveats on data access in the new world of .NET Core.

Remember that an ASP.NET Core application can compile against the .NET Core framework or the full .NET framework. If you choose to use the full .NET framework, you’ll have all the same data access options that you had in the past. These options include low-level programming interfaces like ADO.NET and high-level ORMs like the Entity Framework.

If you want to target .NET Core, you have fewer options available today. However, because .NET Core is still new, we will see more options appear over time.

Bertrand Le Roy recently posted a comprehensive list of Microsoft and third-party .NET Core packages for data access. The list shows NoSQL support for Azure DocumentDB, RavenDB, MongoDB and Redis. For relational databases, you can connect to Microsoft SQL Server, PostgreSQL, MySQL and SQLite. You can choose NPoco, Dapper and the new Entity Framework Core as ORM frameworks for .NET Core.

Because the Entity Framework is a popular data access tool for .NET development, we will take a closer look at the new version of EF Core.

On the surface, EF Core is like its predecessors, featuring an API with DbContext and DbSet classes. You can query a data source using LINQ operators like Where, OrderBy and Select.

Under the covers, however, EF Core is significantly different from previous versions of EF. The EF team rewrote the framework and discarded much of the architecture that had been around since version 1 of the project. If you’ve used EF in the past, you might remember there was an ObjectContext hiding behind the DbContext class plus an unnecessarily complex entity data model. The new EF Core is considerably lighter, which brings us to the discussion of pros and cons.

In the EF Core rewrite, you won't find an entity data model or EDMX design tool. The controversial lazy loading feature is not supported for now but is listed on the roadmap. The ability to map stored procedures to entity operations is not in EF Core, but the framework still provides an API for sending raw SQL commands to the database. This feature currently allows you to map only results from raw SQL into known entity types. Personally, I’ve found the ability to consume views from SQL Server to be too restrictive.

With EF Core, you can take a “code first” approach to database development by generating database migrations from class definitions. However, the only tooling to support a “database first” approach to development is a command line scaffolding tool that can generate C# classes from database tables. There are no tools in Visual Studio to reverse engineer a database or update entity definitions to match changes in a database schema. Model visualization is another feature on the future roadmap.

Like EF 6, EF Core supports popular relational databases, including SQL Server, MySQL, SQLite and PostgreSQL, but Oracle is currently not supported in EF Core.

EF Core is a cross-platform framework you can use on Linux, macOS and Windows. The new framework is considerably lighter than frameworks of the past and is also easier to extend and customize thanks to the application of the dependency inversion principle.

EF Core plans to extend the list of supported database providers beyond relational databases. Redis and Azure Table Storage providers are on the roadmap for the future.

One exciting new feature is the new in-memory database provider. The in-memory provider makes unit testing easier and is not intended as a provider you would ever use in production. In a unit test, you can configure EF Core to use the in-memory provider instead of writing mock objects or fake objects around a DbContext class, which can lead to considerably less coding and effort in testing.
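As a rough sketch of the idea, the following configures a context to use the in-memory provider and runs a LINQ query against it. The `Invoice` entity, `BillingContext`, and `InMemoryExample` are hypothetical names for illustration. The sketch assumes the Microsoft.EntityFrameworkCore.InMemory package is referenced, and the exact `UseInMemoryDatabase` overloads have varied between EF Core versions, so check the version you target.

```csharp
using System.Linq;
using Microsoft.EntityFrameworkCore;

// Hypothetical entity and context used only for this illustration.
public class Invoice
{
    public int Id { get; set; }
    public decimal Total { get; set; }
}

public class BillingContext : DbContext
{
    public BillingContext(DbContextOptions<BillingContext> options)
        : base(options)
    {
    }

    public DbSet<Invoice> Invoices { get; set; }
}

public static class InMemoryExample
{
    public static int CountLargeInvoices(string databaseName)
    {
        // Point the context at a named in-memory store instead of a real database.
        var options = new DbContextOptionsBuilder<BillingContext>()
            .UseInMemoryDatabase(databaseName)
            .Options;

        // Arrange: seed data through one context instance.
        using (var db = new BillingContext(options))
        {
            db.Invoices.Add(new Invoice { Total = 50m });
            db.Invoices.Add(new Invoice { Total = 500m });
            db.SaveChanges();
        }

        // Act: query through a second instance, as application code would.
        using (var db = new BillingContext(options))
        {
            return db.Invoices.Count(i => i.Total > 100m);
        }
    }
}
```

Contexts that share a database name share data, so giving each test its own name (a GUID string works) keeps tests isolated from one another.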

EF Core is not a drop-in replacement for EF 6. Don’t consider EF Core an upgrade. Existing applications using the DbContext API of EF will have an easier time migrating to EF Core, while applications relying on entity data models and ObjectContext APIs will need a rewrite. Fortunately, you can use previous versions of the Entity Framework with ASP.NET Core if you target the full .NET framework instead of .NET Core. Given some of the missing features of EF Core, you’ll need to evaluate the framework in the context of a specific application to make sure the new EF will be the right tool to use.

OdeToCode by K. Scott Allen
