In previous posts we looked at how to choose an approach for working with the management APIs, and how to set up a service principal name to authenticate an application that invokes the APIs.

In that first post we decided (assuming "we" are .NET developers) that we want to work with the APIs using an SDK instead of building our own HTTP messages using HttpClient. However, even here there are choices in which SDK to use. In this post we will compare and contrast two SDKs, and I’ll offer some tips I’ve learned in figuring out how the SDKs work. Before we dig in, I will say that having the REST API reference readily available is still useful even when working with the higher level SDKs. It is often easier to find the available operations for a given resource and what the available parameters control by looking at the reference directly.

The SDKs for working with the management APIs from C# can be broadly categorized into either generated SDKs, or fluent SDKs. Generated SDKs cover nearly all operations in the management APIs and Microsoft creates these libraries by generating C# code from metadata (OpenAPI specs, formerly known as Swagger). In the other category, human beings craft the fluent version of the libraries to make code readable and operations discoverable, although you won’t find a fluent package for every API area.

In this post we’ll work with the Azure SQL management APIs. Imagine we want to programmatically change the Pricing tier of an Azure SQL instance to scale a database up and down. Scaling up to a higher pricing tier gives the database more DTUs to work with. Scaling down gives the database fewer DTUs, but also is less expensive. If you've worked with Azure SQL, you'll know DTUs are the frustratingly vague measurement of how many resources an Azure SQL instance can utilize to process your workload. More DTUs == more powerful SQL database.

The Azure SQL management SDK is in the Microsoft.Azure.Management.Sql NuGet package, which is still in preview. I prefer this package to the package with the word Windows in the name, as this package is actively updated. The management packages support both .NET Core (netstandard 1.4) and the .NET Framework.

The first order of business is to generate a token that will give the app an identity and authorize the app to work with the management APIs. You can obtain the token using raw HTTP calls, or use the Microsoft.IdentityModel.Clients.ActiveDirectory package, also known as ADAL (Active Directory Authentication Library). You’ll need your application’s ID and secret, which were set up in the previous post when registering the app with Azure AD, as well as your tenant ID, also known as the directory ID, which is the ID of your Azure AD instance. By the way, have you noticed the recurring theme in these posts of having two names for every important object?

Take the above ingredients and cook them in an AuthenticationContext to produce a bearer token:

    // Namespaces used: System.Threading.Tasks,
    // Microsoft.IdentityModel.Clients.ActiveDirectory (ADAL), Microsoft.Rest (TokenCredentials)
    public async Task<TokenCredentials> MakeTokenCredentials()
    {
        var appId = "798dccc9-....-....-....-............";
        var appSecret = "8a9mSPas....................................=";
        var tenantId = "11be8607-....-....-....-............";

        // Login endpoint plus tenant ID, and the resource the token is issued for
        var authority = $"https://login.windows.net/{tenantId}";
        var resource = "https://management.azure.com/";

        var authContext = new AuthenticationContext(authority);
        var credential = new ClientCredential(appId, appSecret);
        var authResult = await authContext.AcquireTokenAsync(resource, credential);

        return new TokenCredentials(authResult.AccessToken, "Bearer");
    }

In the above example, I’ve hard coded all the pieces of information to make the code easy to read, but you’ll certainly make a parameter object for flexibility. Note the authority will be login.windows.net for the Azure global cloud, plus your tenantId, although I believe you can also use your friendly Azure AD domain name here. The resource parameter for AcquireTokenAsync will always be management.azure.com, unless you are in one of the special Azure clouds.
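One possible shape for that parameter object is sketched below. This is only a sketch: the ServicePrincipalSettings class and the environment variable names are my own inventions for illustration and not part of ADAL or any Azure SDK. The defaults mirror the global cloud values hard coded above.

```csharp
using System;

// Hypothetical parameter object; the class name and members are illustrative only.
public sealed class ServicePrincipalSettings
{
    public string AppId { get; }
    public string AppSecret { get; }
    public string TenantId { get; }
    public string LoginEndpoint { get; }
    public string Resource { get; }

    public ServicePrincipalSettings(
        string appId, string appSecret, string tenantId,
        string loginEndpoint = "https://login.windows.net",
        string resource = "https://management.azure.com/")
    {
        AppId = appId ?? throw new ArgumentNullException(nameof(appId));
        AppSecret = appSecret ?? throw new ArgumentNullException(nameof(appSecret));
        TenantId = tenantId ?? throw new ArgumentNullException(nameof(tenantId));
        LoginEndpoint = loginEndpoint;
        Resource = resource;
    }

    // Login endpoint plus tenant ID, the same format as the authority in the method above.
    public string Authority => $"{LoginEndpoint.TrimEnd('/')}/{TenantId}";

    // Assumed environment variable names; pick whatever your deployment uses.
    public static ServicePrincipalSettings FromEnvironment()
    {
        return new ServicePrincipalSettings(
            Environment.GetEnvironmentVariable("AZURE_CLIENT_ID"),
            Environment.GetEnvironmentVariable("AZURE_CLIENT_SECRET"),
            Environment.GetEnvironmentVariable("AZURE_TENANT_ID"));
    }
}
```

With something like this in place, MakeTokenCredentials could accept the settings as an argument and pass settings.Authority to the AuthenticationContext constructor, which also keeps the secret out of source control.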

With credentials in hand, the gateway to the SQL management APIs is a SqlManagementClient class. Management classes are consistently named across the various SDKs for the different APIs. For example, to manage App Services there is a WebSiteManagementClient in the App Service NuGet. All these service client classes build on HttpClient and provide some extensibility points. For example, the manager constructors all allow you to pass in a DelegatingHandler which you can use to inspect or modify HTTP request and response messages as they work their way through the underlying HttpClient pipeline.
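As a sketch of that extensibility point, here is roughly what a logging DelegatingHandler could look like. It uses only System.Net.Http types. The class name LoggingHandler and the optional log callback are my own choices for illustration, not something the SDK ships.

```csharp
using System;
using System.Net.Http;
using System.Threading;
using System.Threading.Tasks;

// A minimal logging handler. The management client (or an HttpClient) supplies the inner handler.
public class LoggingHandler : DelegatingHandler
{
    private readonly Action<string> log;

    // The log callback is optional and defaults to writing to the console.
    public LoggingHandler(Action<string> log = null)
    {
        this.log = log ?? (message => Console.WriteLine(message));
    }

    protected override async Task<HttpResponseMessage> SendAsync(
        HttpRequestMessage request, CancellationToken cancellationToken)
    {
        // Inspect the outgoing request before it continues down the pipeline
        log($"--> {request.Method} {request.RequestUri}");

        var response = await base.SendAsync(request, cancellationToken);

        // Inspect the response on the way back
        log($"<-- {(int)response.StatusCode} {response.ReasonPhrase}");
        return response;
    }
}
```

Seeing the exact URI and status code for each call makes it much easier to match what the SDK is doing against the REST API reference.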

Here’s a class that demonstrates how to use the SqlManagementClient to move an Azure SQL Instance into the cheapest standard plan with the fewest DTUs. This plan is the "S0" plan.

    // Namespaces used: Microsoft.Azure.Management.Sql,
    // Microsoft.Azure.Management.Sql.Models, Microsoft.Rest
    public class RestApproach
    {
        private SqlManagementClient client;

        public RestApproach(TokenCredentials credentials, string subscriptionId)
        {
            // LoggingHandler is a custom DelegatingHandler for inspecting HTTP traffic
            client = new SqlManagementClient(credentials, new LoggingHandler());
            client.SubscriptionId = subscriptionId;
        }

        public async Task SetDtus()
        {
            var resourceGroupName = "rgname";
            var serverName = "server";
            var databaseName = "targetdatabase";

            // Read the current state first so the log shows the existing service level
            var database = await client.Databases.GetAsync(resourceGroupName, serverName, databaseName);
            Console.WriteLine($"Database {database.Name} is {database.ServiceLevelObjective}");
            Console.WriteLine($"Updating {database.Name} to S0");

            // Request the new service objective by name
            var updateModel = new DatabaseUpdate(requestedServiceObjectiveName: "S0");
            await client.Databases.UpdateAsync(resourceGroupName, serverName, databaseName, updateModel);
        }
    }

A couple notes on the code.

First, all management APIs are grouped into properties on the management class. Use client.Databases for database operations, and client.Servers for server operations, and so on. This might feel odd at first.

Secondly, we have to face the terminology beast yet again. What we might think of as pricing tiers or DTU settings in the portal will be referred to as “service level objectives”. If you do any work with the Azure resource manager or resource templates, I’m sure you’ve already experienced the mapping of engineering terms to UI terms.

Thirdly, even though the database update model has a ServiceLevelObjective property, to change a service level you need to use the RequestedServiceObjectiveName property on the update model. This is one of those scenarios where reading the REST API documentation can help, because the properties will map to the parameters you see in the docs by name, and the docs are clear about what each parameter can do.

Fourthly, some operations, like setting the service level of a SQL database, require specific string values like “S0”. There is always an API you can use to retrieve the legal values that takes into account your location. For service levels, you can also use the CLI to see a list.

    λ az sql db list-editions --location "EastUS" --query "[].supportedServiceLevelObjectives[].name"
    [
      "Basic",
      "S0",
      "S1",
      ...
      "DW30000c",

While the generated SDK packages will give you some friction until your mental model adjusts, they are an effective approach to using the management APIs. There is no need to use HttpClient directly, but if you need the flexibility, the HttpClient instance is available from the management client class.

The fluent version of the SQL management SDK is in the Microsoft.Azure.Management.Sql.Fluent package. You can take any management package and add the word “Fluent” on the end to see if there is a fluent alternative. You’ll also want to reference Microsoft.Azure.Management.ResourceManager.Fluent for writing easier authentication code.

The first step again is to put together some credentials:

    // Namespaces used: Microsoft.Azure.Management.ResourceManager.Fluent,
    // Microsoft.Azure.Management.ResourceManager.Fluent.Authentication
    public AzureCredentials MakeAzureCredentials(string subscriptionId)
    {
        var appId = "798dccc9-....-....-....-............";
        var appSecret = "8a9mSPas....................................=";
        var tenantId = "11be8607-....-....-....-............";
        var environment = AzureEnvironment.AzureGlobalCloud;

        var credentials = new AzureCredentialsFactory()
            .FromServicePrincipal(appId, appSecret, tenantId, environment);

        return credentials;
    }

Notice the fluent API requires a bit less code. The API is smart enough to determine the login endpoints and management endpoints based on the selected AzureEnvironment (there’s the global cloud, but also the specialized clouds like the German cloud, U.S. Federal cloud, etc).

Now, here is the fluent version of setting the service level to compare with the previous code.

    // Namespaces used: Microsoft.Azure.Management.Sql.Fluent,
    // Microsoft.Azure.Management.ResourceManager.Fluent.Authentication
    public class FluentApproach
    {
        private ISqlManager manager;

        public FluentApproach(AzureCredentials credentials, string subscriptionId)
        {
            manager = SqlManager.Authenticate(credentials, subscriptionId);
        }

        public async Task SetDtus()
        {
            var resourceGroupName = "rgname";
            var serverName = "servername";
            var databaseName = "databasename"; // case sensitive

            // Walk the resource hierarchy: server first, then the database on that server
            var database =
                (await manager.SqlServers
                              .GetByResourceGroupAsync(resourceGroupName, serverName))
                .Databases.Get(databaseName);

            // Chain the changes, then apply them in one call
            await database.Update()
                          .WithServiceObjective("S2")
                          .ApplyAsync();

            Console.WriteLine($"Database {database.Name} was {database.ServiceLevelObjective}");
            Console.WriteLine($"Updating {database.Name} to S2");
        }
    }

The fluent API uses a SqlManager class. Instead of grouping all operations on the manager, you can now think in the same hierarchy as the resources you manage. Instead of figuring out which properties to set on an update model, the fluent API allows for method chains that build up a data structure. As an aside, I still haven’t found an aesthetic approach to formatting chained methods with the await keyword, so it is tempting to use the synchronous methods. However, I still prefer the fluent API to the code-generated API as the code is easier to read and write.

You won’t find many examples of using the management APIs on the web, but the APIs can be an incredibly useful tool for automation. ARM templates are arguably a better approach for provisioning and updating resources, and CLI tools are certainly a better approach for interactions up to a medium amount of complexity. But, for services that combine resource management with logic and heuristics, the APIs via an SDK are the best combination.

In a previous post, I wrote about choosing an approach to work with the Azure Management APIs (the REST APIs, as they call them).

Before you can make calls to the API from a program, you’ll want to create a service account in Azure for authentication and authorization. Yes, you could authenticate using your own identity, but there are a few good reasons not to use your own identity. For starters, the management APIs are generally invoked from a non-interactive environment. Also, you can give your service the least set of privileges necessary for the job to help avoid accidents and acts of malevolence.

This post details the steps you need to take, and tries to clear up some of the confusion I’ve encountered in this space.

The various terms you’ll encounter in using the management APIs are a source of confusion. The important words you’ll see in documentation are different from the words you’ll see in the UI of the portal, which can be different from what you’ll read in a friendly blog post. Even the same piece of writing or speaking can transition between two different terms for the same object, because there are varying perspectives on the same abstract concept. For example, an “application” can morph into a “service principal name” after just a few paragraphs. I’ll try not to add to the confusion and in a few cases try to explain why we have different terms, but I fear this is not entirely possible.

To understand the relationship between an application and a service principal, the "Application and service principal objects in Azure Active Directory" article is a good overview. In a nutshell, when you register an application in Azure AD, you also create a service principal. An application will only have a single registration in a single AD tenant. Service principals can exist for the single application in multiple tenants, and it is a service principal that represents the identity of an application when accessing resources in Azure. If this paragraph makes any sense (and yes, it can take some time to internalize), then you'll begin to see why it is easy to interchange the terms "application" and "service principal" in some scenarios.

There are three basic steps to follow when setting up the service account (a.k.a application, a.k.a service principal name).

1. Create an application in the Azure Active Directory containing the subscription you want the program to interact with.

2. Create a password for the application (unlike a user, a service can have multiple passwords, which are also referred to as keys).

3. Assign role-based access control to the resources, resource groups, or subscriptions your service needs to interact with.

If you want to work through this setup using the portal, there is a good piece of Microsoft documentation with a sentence-case title: “Use portal to create an Azure Active Directory application and service principal that can access resources”.

Even if you use other tools to set up the service account, you might occasionally come to the portal and try to see what is happening. Here are a couple of hiccups I’ve encountered in the portal.

First, if you navigate in the Azure portal to Azure Active Directory –> App registrations, you would probably expect to see the service you’ve registered. This used to be the case, I believe, but I’m also certain that even applications that I personally register do not appear in the list of app registrations until I select the “All apps” option on this page.

And yes, the portal will list your service as a “Web app / API” type, even if your application is a console application. This is normal behavior. You don’t want to register your service as a “native application”, no matter how tempting that may be.

The confusing terminology here exists, I believe, because the terms the portal is using are mapping to categories from the OAuth and OpenID parlance. “Web apps / API” types are confidential clients that we can trust to keep a secret. A native app is a public client that is not trustworthy. What I’m building is a piece of headless software that runs on a server, thus I’m building a confidential client and can register the client as a “Web apps / API” type, even though the app will never listen for socket connections.

Another bit of confusion in the portal exists when you assign roles to your service. Most documentation will refer to “role-based access control”, although in the portal you are looking for the IAM blade (which I assume stands for “identity and access management”). When you go to add a new role, don’t expect the service account to appear in the list of available identities. You’ll need to search for the service name, starting with the first letters, and then select the service.

Instead of clicking around in the portal, you can also use APIs to set up your API access, or a command line tool like the Azure CLI. After logging in and setting your subscription, use the ad sp group of commands to work with service principals in AD.

    λ az ad sp create-for-rbac -n MyTestSpn2 -p somepassword
    Retrying role assignment creation: 1/36
    Retrying role assignment creation: 2/36
    {
      "appId": "d9fac83c-...-...-a29a-6f7709a0a69e",
      "displayName": "MyTestSpn2",
      "name": "http://MyTestSpn2",
      "password": "...",
      "tenant": "11be8607-....-....-....-............"
    }

The ad sp create-for-rbac command will place the new service principal into the Contributor role on the current subscription. For many services, these defaults will not give you a least privileged account. Use the --role argument to change the role. Use the --scopes argument to apply the role at a more granular level than subscription. The scope is the resourceId you can find on any properties blade in the portal. For example, --scopes /subscriptions/5541772b-....-....-....../resourceGroups/myresourcegroup.
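If you generate these scope strings from code, a tiny helper keeps the format in one place. This is a minimal sketch: the ArmScope class and its method names are hypothetical, and the string format simply follows the --scopes example above.

```csharp
// Hypothetical helper for building scope strings; not part of any Azure SDK.
public static class ArmScope
{
    // Scope covering an entire subscription
    public static string ForSubscription(string subscriptionId) =>
        $"/subscriptions/{subscriptionId}";

    // Narrower scope covering a single resource group in the subscription
    public static string ForResourceGroup(string subscriptionId, string resourceGroupName) =>
        $"{ForSubscription(subscriptionId)}/resourceGroups/{resourceGroupName}";
}
```

The narrower the scope you hand to a role assignment, the closer you get to a least privileged account.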

With a service account in place we can finally move forward and write some code to work with the management APIs. Stay tuned for this exciting code in the next post.

Now available: Working with Azure Management REST APIs

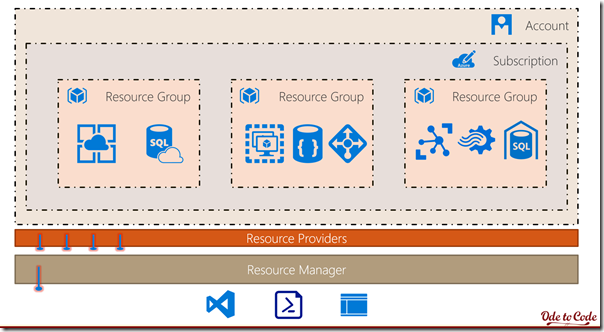

The Azure REST APIs allow us to interact with nearly every type of resource in Azure programmatically. We can create virtual machines, restart a web application, and copy an Azure SQL database using HTTP requests. There are a few choices to make when deciding how to interact with these resource manager APIs, and some potential areas of confusion. In this post and future posts I hope to provide some guidance on how to work with the APIs effectively and avoid some uncertainties.


If you can send HTTP messages, you can interact with the resource manager APIs at a low level. The Azure REST API Reference includes a list of all possible operations categorized by resource. For example, backing up a web site. Each endpoint gives you a URI, the available HTTP methods (GET, PUT, POST, DELETE, PATCH), and a sample request and response. All these HTTP calls need to be authenticated and authorized, a topic for a future post, but the home page describes how to send the correct headers for any request.
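To make the low-level option concrete, here is a sketch that builds (but does not send) a request to list the resource groups in a subscription. It assumes you already have a bearer token in hand. The RawManagementRequest class name is hypothetical, and the default api-version value is an assumption, so check the REST API reference for the version that applies to the operation you call.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;

// Hypothetical helper showing the shape of a raw management API request.
public static class RawManagementRequest
{
    public static HttpRequestMessage ListResourceGroups(
        string subscriptionId, string accessToken, string apiVersion = "2021-04-01")
    {
        // Every resource manager URI starts at management.azure.com and carries an api-version
        var uri = new Uri(
            $"https://management.azure.com/subscriptions/{subscriptionId}/resourcegroups?api-version={apiVersion}");

        var request = new HttpRequestMessage(HttpMethod.Get, uri);

        // The token travels in the Authorization header as a bearer token
        request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", accessToken);
        request.Headers.Accept.Add(new MediaTypeWithQualityHeaderValue("application/json"));
        return request;
    }
}
```

Passing the resulting message to HttpClient.SendAsync would execute the call. Multiply this by every operation, model, and error case you need, and the appeal of a higher level of abstraction becomes clear.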

These low-level APIs are documented and available to use, but generally you want to write scripts and programs using a slightly higher level of abstraction and only know about the underlying API for reference and debugging.

Fortunately, specifications for all resource manager APIs are available in OpenAPI / Swagger format. You can find these specifications in the azure-rest-api-specs GitHub repository. With a codified spec in hand, we can generate wrappers for the API. Microsoft has already generated wrappers for us in several different languages.

Microsoft provides Azure management libraries that wrap these underlying APIs for a number of popular languages. You can find links on the Microsoft Azure SDKs page. When looking for a management SDK, be sure to select a management SDK instead of a service SDK. A blob storage management SDK is an SDK for creating and configuring a storage account, whereas the service SDK is for reading and writing blobs inside the storage account. A management SDK generally has the name "management" or "arm" in the name (where arm stands for Azure Resource Manager), but the library names are not consistent across different languages. Instead, the names match the conventions for the ecosystem, and Node packages follow a different style than .NET and Java. As an example, the service SDK for storage in Node is azure-storage-node, whereas the management package is azure-arm-storage.

In addition to SDKs, there are command line utilities for managing Azure. PowerShell is one option. In my experience, PowerShell provides the most complete coverage of the management APIs, and over the years I've seen a few operations that you cannot perform in the Azure portal, but can perform with PowerShell.

However, my favorite command line tool is the cross-platform Azure CLI. Not being a regular user of PowerShell, I find the CLI easier to work with and the available commands are easier to discover. That being said, Azure CLI doesn't cover all of Azure, although new features arrive on a regular cadence.

In general, stick with the command line tools if you have quick, simple scripts to run. Some applications, however, require more algorithms, logic, heuristics, and cooperation with other services. For these scenarios, I'd prefer to work with an SDK in a programming language like C#.

Speaking of which ...

If you are a C# developer who wants to manage Azure using C# code, you have the option of going with raw HTTP messages using a class like HttpClient, or using the SDK. Use the SDK. There is enough flexibility in the SDKs to do everything you need, and you don't need to build your own encapsulation of the APIs.

You do need to choose the correct version of the SDKs. If you search the web for examples of managing Azure from C# code, you'll run across NuGet packages with the name Microsoft.WindowsAzure.Management.*. Do not use these packages; they are obsolete. Make sure you are using packages that start with Microsoft.Azure.Management.* (no Windows in the name).

One caveat to these packages is that the classes inside are auto-generated from the OpenAPI specs, so they tend to feel quirky and discoverability can be difficult. I've found using a good code navigation tool like dotPeek allows me to find the model that I want (model classes represent the data returned by a given API endpoint, i.e. a resource in REST terms), and then use "Find References" to see the operations that act on the model resource.

For C#, it's often easier to work with the Fluent .NET management APIs. These fluent management APIs build on top of the underlying management package, but have extension methods tailored for discoverability and readability. For example, Microsoft.Azure.Management.Sql allows you to use the management API from generated .NET code. The Microsoft.Azure.Management.Sql.Fluent package adds discoverability and readability to the code. Note that not all management packages have a fluent counterpart, however, and not all operations might be exposed from the fluent interface.

This post covers some of the options and decision points for working with the Azure Resource Manager API. In future posts we'll see how to write C# code for interacting with the base API and the fluent API. First, however, we'll have to set up a service principal so our code can be authenticated and authorized to execute against our resources.

Also see: Setting Up Service Principals to Use the Azure Management APIs and Working with Azure Management REST APIs

Recorded many months ago in my previous life, this new course shows how to deploy ASP.NET Core into Azure using a few different techniques. We'll start by using Git, then progress to using a build and release pipeline in Visual Studio Team Services. We'll also demonstrate how to use Docker and containers for deployment, and how to use Azure Resource Manager Templates to automate the provisioning and updates of all Azure resources.

Lars has a blog post with a behind the scenes look, and you'll find the new course on Pluralsight.com.

Microsoft's collection of open source command line tools built on Python continues to expand. Let's take the scenario where I need to execute a query against an Azure SQL database. The first step is poking a hole in the firewall for my current IP address. I'll use the Azure CLI 2.0:

    λ az login
    To sign in, use a web browser to open the page https://aka.ms/devicelogin and enter the code DRAMCY103 to authenticate.
    [
      {
        ...subscription 1 ...
      },
      {
        ... subscription 2 ...
      }
    ]

For the firewall settings, az has a firewall-rule create command:

    λ az sql server firewall-rule create -g resourcegroupname -s mydbserver -n watercressip --start-ip-address 173.169.164.144 --end-ip-address 173.169.164.144
    {
      "endIpAddress": "173.169.164.144",
      ...
      "type": "Microsoft.Sql/servers/firewallRules"
    }

Now I can launch the mssql-cli tool.

    λ mssql-cli -S mydbserver.database.windows.net -U appusername -d appdbname

Auto-complete for columns works well when you have a FROM clause in place (maybe LINQ had it right after all).

If I'm in transient mode, I'll clean up and remove the firewall rule.

λ az sql server firewall-rule delete -g resourcegroupname -s mydbserver -n watercressip

The mssql-cli has a roadmap, and I'm looking forward to future improvements.

In any method returning a Task, it is desirable to avoid using Task.Run if you can compute a result without going async. For example, if logic allows you to short-circuit a computation, or if you have a fake method in a test returning a pre-computed answer, then you don't need to use Task.Run.

Example:

Here is a method that is not going to do any work, but needs to return a task to fulfill an interface contract for a test:

    public Task ComputationWithSideEffects(string someId)
    {
        return Task.Run(() => {
            // i'm just here to simulate hard work
        });
    }

Instead of returning the result of Task.Run, there are two helpers on the Task class that make the code more readable and require a bit less runtime overhead.

For the above scenario, I'd rather use Task.CompletedTask:

    public Task ComputationWithSideEffects(string someId)
    {
        return Task.CompletedTask;
    }

What if the caller expects to receive a result from the task? In other words, what if you return a Task<T>? In this scenario, if you already have an answer, use Task.FromResult to wrap the answer.

    public Task<Patience> FindPatience()
    {
        if (cachedPatience != null)
        {
            // The answer is already known, so wrap it in a completed task
            return Task.FromResult(cachedPatience);
        }
        else
        {
            return ImAlreadyGone();
        }
    }
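Both helpers fit naturally into a test fake. Here is a minimal sketch, assuming a made-up IOrderService interface. The interface, the fake, and the canned total are all hypothetical names and values chosen only for illustration.

```csharp
using System.Threading.Tasks;

// Hypothetical contract that a test needs to satisfy.
public interface IOrderService
{
    Task ArchiveAsync(string orderId);
    Task<decimal> GetTotalAsync(string orderId);
}

public class FakeOrderService : IOrderService
{
    public int ArchiveCalls { get; private set; }

    public Task ArchiveAsync(string orderId)
    {
        // Nothing asynchronous happens here, so hand back an already completed task
        ArchiveCalls++;
        return Task.CompletedTask;
    }

    public Task<decimal> GetTotalAsync(string orderId)
    {
        // The answer is pre-computed, so wrap it instead of scheduling work with Task.Run
        return Task.FromResult(42.50m);
    }
}
```

A caller can still await both methods as usual. The tasks are already complete when they are returned, so no thread pool work is queued along the way.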

Last night, I began to wonder about my virtual neighbors here at OdeToCode.

You see, when you provision an Azure App Service, Azure will give your service a public IP address. The IP address will stay with the App Service into the future, as long as you don't delete the App Service.

The IP address is a virtual address shared by many App Services that run on the same "stamp" in a region (where a stamp is essentially a cluster of servers). If, for some reason, you don't want a public address or don't want to share an address, another approach is to setup an isolated App Service, but isolation is pricey. For most of us, using the shared, virtual, and public IP is fine, as we can use custom domains and SSL certificates, and everything just works as expected.

But, back to last night.

What if I could wander around my stamp like a character in a Gibson novel? Who would I see? Do I share an IP address with a celebrity website? Do I live in a criminalized neighborhood where bewildered netizens show up after being click-jacked? Do I have any neighbors who would lend me 2 eggs, and a cup of all-purpose flour if in a pinch?

First step, finding the IP address for OdeToCode.

    λ nslookup odetocode.com
    Server:  UnKnown
    Address:  192.168.200.1

    Non-authoritative answer:
    Name:    odetocode.com
    Address:  168.62.48.183

Taking this IP address to hostingcompass.com, I can see there are 84 known web sites hosted on the IP address (and this wouldn't include sites fronted by a proxy, like Cloudflare, or without a custom domain, I think).

What's amazing is not just how many websites I recognized, but how many websites are run by people I personally know. For example:

https://www.alvinashcraft.com/

The neighborhood also includes an inordinate number of bars and restaurants, as well as a photographer and investment advisor. Cheers!

OdeToCode by K. Scott Allen
