After considering the three rules and creating a baseline, an entire team can work with a database whose definition lives safely in a source control repository. The day will come, however, when the team needs to change the schema. Each change creates a new version of the database. In my plan, the baseline scripts created a schema change log to track these changes.

By "change", I mean a change to a table, index, key, constraint, or any other object that requires DDL, with the exception of views, stored procedures, and functions. I treat those objects differently and we'll cover those in the next post. I also include any changes to static data and bootstrap data in change scripts.

Alternatives

Before jumping into an example, I just wanted to point out there are many ways to manage database changes and migrations. Phil Haack describes his idempotent scripts in Bulletproof Sql Change Scripts Using INFORMATION_VIEWS. Elliot Smith and Rob Nichols are database agnostic with Ruby Migrations. If you know of other good articles, please post links in the comments. Again, the goal is to manage change in the simplest manner possible for your project.

Example, Please

The team just baselined their database, which includes a Customers table, but now wants to add a new column to store a customer's shoe size. They also realized their OrderState table, a lookup table that includes the values 'Open' and 'Shipped', now needs a new value of 'Canceled'. They would need to create a schema change script that looks like the following. Notice I can include as many changes as needed in a single script.

File: sc.01.00.0001.sql
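The script listing itself isn't reproduced here. As a rough sketch only, the two changes described above might look like the following when run from Python against a throwaway in-memory SQLite database. The column names (Id, Name, ShoeSize), their types, and the SQLite dialect are assumptions made for illustration; a real script would use the DDL of whatever server the team runs.

```python
import sqlite3

# Hypothetical stand-in for the baselined schema (names and types are assumed).
BASELINE = """
CREATE TABLE Customers (Id INTEGER PRIMARY KEY, Name TEXT NOT NULL);
CREATE TABLE OrderState (Name TEXT PRIMARY KEY);
INSERT INTO OrderState (Name) VALUES ('Open'), ('Shipped');
"""

# One change script can carry several changes:
# here a schema change plus a static data change.
CHANGE_SCRIPT = """
ALTER TABLE Customers ADD COLUMN ShoeSize INTEGER;
INSERT INTO OrderState (Name) VALUES ('Canceled');
"""

conn = sqlite3.connect(":memory:")
conn.executescript(BASELINE)
conn.executescript(CHANGE_SCRIPT)

# Inspect the result: column names of Customers and the lookup values.
columns = [row[1] for row in conn.execute("PRAGMA table_info(Customers)")]
states = [row[0] for row in conn.execute("SELECT Name FROM OrderState ORDER BY Name")]
print(columns)
print(states)
```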

Whoever writes this change script will test it thoroughly and against a variety of test data, then commit the change script into source control. The schema change is officially published. The schema change will start to appear in developer workspaces as they update from source control, and on test machines as new builds are pushed into QA and beyond.

It's good to automate database updates as ruthlessly as possible. Ideally, a developer, tester, or installer can run a tool that looks at the schema version of the database in the SchemaChangeLog table, and compares that version to the available updates it finds in the form of schema change scripts that reside on the file system. Sorting files by name will suffice using the techniques explained here. Command line tools work the best, because you can use them in build scripts, developer machines, and install packages. You can always wrap the tool in a GUI to make it easy to use. Here's the output I recently saw from a schema update tool (some entries removed for brevity):

Connected to server .

Connected to database xyz

Host: SALLEN2 User: xyz\sallen

Microsoft SQL Server 2005 - 9.00.3054.00 (Intel X86)

Schema history:

05.00.0000 initial-install on Dec 13 2007 11:26AM

05.00.0001 sc.05.00.0001.sql on Dec 13 2007 11:26AM

05.00.0002 sc.05.00.0002.sql on Dec 13 2007 11:26AM

...

05.00.0012 sc.05.00.0012.sql on Dec 13 2007 11:26AM

05.01.0000 sc.05.01.0000.sql on Dec 13 2007 11:26AM

05.01.0001 sc.05.01.0001.sql on Dec 13 2007 11:26AM

...

05.01.0019 sc.05.01.0019.sql on Dec 13 2007 11:26AM

Current version:

05.01.0019

The following updates are available:

sc.05.01.0020.sql

sc.05.01.0021.sql
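The comparison such a tool performs can be sketched in a few lines. This is a minimal illustration, not the tool that produced the output above: the function names are hypothetical, and it assumes script files always follow the sc.MM.mm.pppp.sql naming pattern seen in the listing.

```python
import re

# Assumed naming convention: sc.<major>.<minor>.<point>.sql
SCRIPT_PATTERN = re.compile(r"^sc\.(\d{2})\.(\d{2})\.(\d{4})\.sql$")


def script_version(filename):
    """Return (major, minor, point) for a change script name, or None."""
    match = SCRIPT_PATTERN.match(filename)
    return tuple(int(part) for part in match.groups()) if match else None


def parse_version(text):
    """Turn '05.01.0019' into (5, 1, 19) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def available_updates(current_version, filenames):
    """List change scripts newer than the current version, oldest first."""
    current = parse_version(current_version)
    candidates = [(script_version(name), name) for name in filenames]
    newer = [(v, name) for v, name in candidates if v is not None and v > current]
    return [name for _, name in sorted(newer)]


files = ["sc.05.01.0021.sql", "sc.05.01.0019.sql", "readme.txt", "sc.05.01.0020.sql"]
print(available_updates("05.01.0019", files))
```

Comparing parsed tuples rather than raw strings keeps the ordering correct even if a version component ever outgrows its zero padding.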

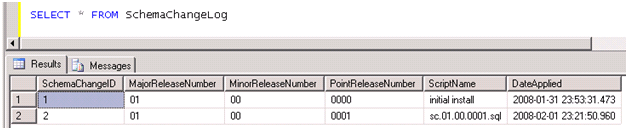

Once a developer runs the schema change in this post, they should see the following in the schema change log:

Schema Change Rules and Tips

Once a script is published into source control, it cannot be changed! Once someone updates their database with an update script, they should never have to run the same script on that same database again.

The responsibility of updating the SchemaChangeLog table should reside with the tool that applies the updates. This way, the tool can ensure the script runs to completion before recording the update in the change log.
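One way a tool could enforce that ordering is sketched below, using SQLite from Python. The function name and the SchemaChangeLog columns (Version, ScriptName, DateApplied) are assumptions, and the sketch relies on SQLite's ability to roll back DDL, which not every database engine offers.

```python
import sqlite3


def apply_change_script(conn, version, script_name, script_sql):
    """Run one change script; record it only if it ran to completion.

    Assumes script_sql ends with a semicolon and manages no transactions itself.
    """
    try:
        conn.executescript("BEGIN;\n" + script_sql + "\nCOMMIT;")
    except sqlite3.Error:
        conn.rollback()  # undo whatever part of the script already ran
        raise
    conn.execute(
        "INSERT INTO SchemaChangeLog (Version, ScriptName, DateApplied) "
        "VALUES (?, ?, datetime('now'))",
        (version, script_name),
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE SchemaChangeLog (Version TEXT, ScriptName TEXT, DateApplied TEXT);
CREATE TABLE Customers (Id INTEGER PRIMARY KEY);
""")

# A script that succeeds is logged.
apply_change_script(conn, "01.00.0001", "sc.01.00.0001.sql",
                    "ALTER TABLE Customers ADD COLUMN ShoeSize INTEGER;")

# A script that fails part way through is rolled back and never logged.
try:
    apply_change_script(conn, "01.00.0002", "sc.01.00.0002.sql",
                        "ALTER TABLE Customers ADD COLUMN Broken INTEGER;\n"
                        "INSERT INTO NoSuchTable VALUES (1);")
except sqlite3.Error as error:
    print("failed:", error)

logged = [row[0] for row in conn.execute("SELECT ScriptName FROM SchemaChangeLog")]
columns = [row[1] for row in conn.execute("PRAGMA table_info(Customers)")]
print(logged)
print(columns)
```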

Always back up a production database before applying a change script. If a change script happens to fail with an error, you can at least get the database back into a known state.

Some people will wonder what happens if multiple developers are all working on change scripts at the same time. Fortunately, the source control repository is a great mediator. I can't add an update script with the same name as your update script – I'll have to rev my version number if you beat me to the commit by a few seconds.

Of course, changes are not just about schema changes. You also have to write the code to migrate data. For instance, if for some reason we moved the ShoeSize column from the Customers table to the CustomerDetails table, the update script will also need to move and preserve the data. Data manipulation is often the trickiest part of change scripts and where they need the most testing.
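To make the ShoeSize example concrete, here is a small sketch of the copy step, again using SQLite from Python. The key columns (Id, CustomerId) and the sample rows are made up for illustration. The sketch stops short of dropping the old column, since how (and whether) to do that depends on the database engine.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical starting point: ShoeSize still lives on Customers.
conn.executescript("""
CREATE TABLE Customers (Id INTEGER PRIMARY KEY, Name TEXT, ShoeSize INTEGER);
CREATE TABLE CustomerDetails (CustomerId INTEGER PRIMARY KEY REFERENCES Customers(Id));
INSERT INTO Customers (Id, Name, ShoeSize) VALUES (1, 'Ann', 7), (2, 'Bob', 11);
INSERT INTO CustomerDetails (CustomerId) VALUES (1), (2);
""")

# The change script adds the new column AND carries the existing data across.
MIGRATION = """
BEGIN;
ALTER TABLE CustomerDetails ADD COLUMN ShoeSize INTEGER;
UPDATE CustomerDetails
   SET ShoeSize = (SELECT c.ShoeSize
                     FROM Customers AS c
                    WHERE c.Id = CustomerDetails.CustomerId);
-- Dropping Customers.ShoeSize would come last, once the copy is verified.
COMMIT;
"""
conn.executescript(MIGRATION)

moved = conn.execute(
    "SELECT CustomerId, ShoeSize FROM CustomerDetails ORDER BY CustomerId"
).fetchall()

# A cheap check worth keeping in the test suite: no row lost its value.
mismatches = conn.execute("""
    SELECT COUNT(*)
      FROM Customers AS c
      JOIN CustomerDetails AS d ON d.CustomerId = c.Id
     WHERE c.ShoeSize IS NOT d.ShoeSize
""").fetchone()[0]
print(moved, mismatches)
```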

Summary

Managing change with your database yields a great many benefits. Since the schema change scripts are in source control, you can recreate your database as it looked at any point in time. Is a customer reporting a bug on build 3.1.5.6723? Pull the source code tagged or labeled with that version number and run the baseline, then all schema change scripts included in the tag. You now have the same database and have a much better chance to recreate the bug. Also, changes move from development to test, and ultimately into production in a consistent, orderly, and reproducible manner.

I've skipped over quite a few nitty-gritty details, but I hope you get the general idea. I've used this approach for years and it's worked well. Still, I feel the approach is a bit long in the tooth and I'm looking for ways to improve. Feedback is appreciated. What do you think?

Coming up next – managing views and stored procedures.

Continuing from the last post (Three Rules for Database Work), I wanted to drill into some database versioning strategies that have worked well for me.

Caveats and Considerations

As a preface, let me say there are many different strategies that can work. I'm not presenting the one true way. My goal is to roll out database changes in a consistent, testable, reproducible manner. I'm used to working with largish databases that are packaged to install behind a customer's firewall. I don't want to use naïve update scripts that run for 6 hours. Concerns like this bias my thinking and strategies. Every application should manage database change, but you'll have to decide on the simplest strategy that works for your environment.

The Baseline

The first step in versioning a database is to generate a baseline schema. This is the starting point for versioning a database. After you've published the baseline to an authoritative source, any changes to the schema require a schema change script (a topic for the next post, because I have a feeling this is going to be long for a blog post).

You are probably not going to baseline the database on day 1 of a project. I'd suggest letting the early design of the schema settle in a bit so you are not creating a huge number of change scripts. This might sound like I'm suggesting you need to do some big, up front schema design – but that's not true. You can get pretty far into an application these days with in-memory data, fakes, stubs, mocks, and unit tests. Once the model in your code starts to stabilize, you can start thinking of the schema required to persist all the data. If you are ORMing, you can even generate the first schema from your model.

On the other hand, maybe your project and database have already been around a couple years. That's ok – you can baseline today (tomorrow at the latest), and manage change moving forward.

But How?

If you want to do things the hard way, then open a new file in a text editor and write all the SQL commands that will create every table, constraint, function, view, index, and every other object in your database. You'll also want to include commands that populate lookup tables with static data and include any bootstrap data needed by the application. Test the new script against a fresh database server and if successful, commit the file to source control. Consider your schema baselined!

Nobody really does this step the hard way, though. Most of us use tools that we point to a database, and the tools generate one or more scripts for us. Some people like to generate everything into one big script file. Others like to generate one script file for every database object. SQL Server Management Studio provides both options when you elect to script a database. I've seen both approaches work, but the "one file per object" approach feels cumbersome on a day-to-day basis, and unwieldy if the number of objects grows into the thousands.

Personally, I like to take a hybrid approach. I like to keep all of the SQL needed to create tables, constraints, defaults, and primary indexes in a single file. Any views, stored procedures, and functions are scripted one per file.

If you go the multiple file approach, make sure to write a batch file, shell script, application, or some other form of automation that can automatically locate and run all of the script files required to install the database. Human intervention in this process is a step backwards.
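A bare-bones version of that automation might look like the sketch below. It assumes a hypothetical layout in which numeric file name prefixes control the order (tables first, then views), and it uses SQLite only so the example can run anywhere.

```python
import sqlite3
import tempfile
from pathlib import Path


def install_database(conn, script_dir):
    """Run every .sql file in script_dir in file name order; return the names run."""
    scripts = sorted(Path(script_dir).glob("*.sql"), key=lambda path: path.name)
    for script in scripts:
        conn.executescript(script.read_text(encoding="utf-8"))
    return [script.name for script in scripts]


# Demo with two throwaway script files (names and contents are made up).
with tempfile.TemporaryDirectory() as folder:
    Path(folder, "002.views.sql").write_text(
        "CREATE VIEW CustomerIds AS SELECT Id FROM Customers;", encoding="utf-8")
    Path(folder, "001.tables.sql").write_text(
        "CREATE TABLE Customers (Id INTEGER PRIMARY KEY);", encoding="utf-8")
    conn = sqlite3.connect(":memory:")
    ran = install_database(conn, folder)

objects = [row[0] for row in conn.execute("SELECT name FROM sqlite_master ORDER BY name")]
print(ran)
print(objects)
```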

Also, many tools like to include CREATE DATABASE commands and/or include database names in the scripts they generate. You'll want to purge any reference to a hardcoded database name. You want the name to be configurable (a default name is fine), and you probably want to support multiple databases for your application on the same database instance (mostly for testing).
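One simple way to keep the name configurable is to treat the generated script as a template and substitute the name at install time. The sketch below uses Python's string.Template; the $database_name placeholder, the default name, and the function name are all assumptions for illustration.

```python
from string import Template

# A generated script with the hardcoded name replaced by a placeholder.
SCRIPT_TEMPLATE = Template("CREATE DATABASE $database_name;")


def render_script(template, database_name="AppDb"):
    """Fill in the database name, rejecting anything that isn't a plain identifier."""
    if not database_name.isidentifier():
        raise ValueError(f"unsafe database name: {database_name!r}")
    return template.substitute(database_name=database_name)


print(render_script(SCRIPT_TEMPLATE))
print(render_script(SCRIPT_TEMPLATE, "AppDb_Test1"))
```

With a default in place, most people never think about the name, while a test run can still create a second copy of the database on the same instance.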

Whichever approach you take (one single file, or multiple files), you now have scripts that can recreate the database schema on any developer machine, test machine, or production machine. Everyone uses the exact same schema. Congratulations! You've just increased the quality of your software because the database can be reliably reproduced in any environment.

I Almost Forgot the Most Important Ingredient

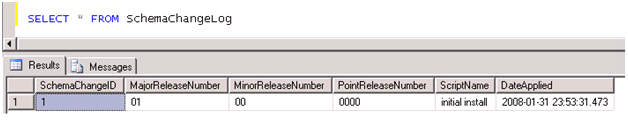

At some point in the future, the schema will have to change. Before you baseline the database you need to add a table to record these schema changes. The following table is the kind of table I'd use to track every change to a database.

The first baseline schema script should, as the last step, officially install version 1.0 of the database:
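The table definition and the install statement aren't reproduced here, so the following is only a guess at their general shape, expressed in SQLite and run from Python. The column names, types, and the version string format are assumptions, loosely modeled on a log that records a version, a script name, and the date applied.

```python
import sqlite3

# Assumed shape of the change log table.
CHANGE_LOG_DDL = """
CREATE TABLE SchemaChangeLog (
    Id          INTEGER PRIMARY KEY,
    Version     TEXT NOT NULL,
    ScriptName  TEXT NOT NULL,
    DateApplied TEXT NOT NULL
);
"""

# Last step of the baseline script: record the initial install.
BASELINE_LAST_STEP = """
INSERT INTO SchemaChangeLog (Version, ScriptName, DateApplied)
VALUES ('01.00.0000', 'initial-install', datetime('now'));
"""

conn = sqlite3.connect(":memory:")
conn.executescript(CHANGE_LOG_DDL)
conn.executescript(BASELINE_LAST_STEP)

row = conn.execute("SELECT Version, ScriptName FROM SchemaChangeLog").fetchone()
print(row)
```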

Some developers love working with relational databases, and other developers can't stand to touch them. Either way - if your application uses a database, you have to treat the database with some respect. The database is as much a part of an application as the code and the models inside the software.

Here are three rules I've learned to live by over the years of working with relational databases.

1. Never use a shared database server for development work.

The convenience of a shared database is tempting. All developers point their workstations to a single database server where they can test and make schema changes. The shared server functions as an authoritative source for the database schema, and schema changes appear immediately to all team members. The shared database also serves as a central repository for test data.

Like many conveniences in software development, a shared database is a tar pit waiting to fossilize a project. Developers overwrite each other's changes. The changes I make on the server break the code on your development machine. Remote development is slow and difficult.

Avoid using a shared database at all costs, as they ultimately waste time and help produce bugs.

2. Always Have a Single, Authoritative Source For Your Schema

Ideally, this single source will be your source control repository (see rule #3). Consider the following conversation:

Developer 1: It's time to push the app into testing. Do we copy the database from Jack's machine, or Jill's machine?

Developer 2: Ummmmmmmm, I don't remember which one is up to date.

Developer 1: We're screwed.

Everyone should know where the official schema resides, and have a frictionless experience in getting a fresh database set up. I should be able to walk up to a computer, get the latest from source control, build, and run a simple tool to set up the database (in many scenarios, the build process can even set up a database if none exists, so the process is one step shorter).

How you put your database into source control depends on your situation and preferences. Any decent O/R mapping tool should be able to create a database given the mappings you've defined in a project. You can also script out the database as a set of one or more files full of SQL DDL commands. I generally prefer to keep database views and programmatic features (including functions, triggers, and stored procedures) as separate files - but more on this in a later post.

There are plenty of tools to help. Leon Bambrick has a long (albeit year-old) list of tools and articles that can help, while Jeff Atwood gushes over the virtues of Visual Studio for Database Professionals.

3. Always Version Your Database

There are many ways to version databases, but the common goal is to propagate changes from development, to test, and ultimately to production in a controlled and consistent manner. A second goal is to have the ability to recreate a database at any point in time. This second goal is particularly important if you are shipping software to clients. If someone finds a bug in build 20070612.1 of your application, you must be able to recreate the application as it appeared in that build - database and all.

In a future post, I'll describe an approach I've used for database versioning that has worked well for many years of commercial development.

In the meantime, if you are looking for more database rules, then Adam Cogan and SSW maintain an excellent list.

Recently, I received a package from my local power company - Alleghany Energy. The package contained two 13-watt compact fluorescent lamps (CFLs). In the United States, various organizations are trying to encourage people to replace their incandescent light bulbs with CFLs to save energy. In fact, recent U.S. legislation will effectively prevent the sale of incandescent bulbs after the year 2014 (unless the bulbs somehow become a lot more efficient by then).

Since the package didn't come with an invoice inside, I assumed my power company was giving away free bulbs. After all, Home Depot gave away 1 million bulbs during last year's Earth Day, so why shouldn't my utility company give away a few, too?

I was wrong.

It was quite a shock to Alleghany's customers when we found out we were being charged almost $12 for these two bulbs as part of the "Maryland Compact Fluorescent Light Energy Efficiency Program".

Outrage ensued. The outrage wasn't so much over the cost of the bulbs as it was about unmet expectations. We expected free bulbs because we weren't told about the cost. Alleghany Energy was either being sneaky, or was incompetent at communicating with their customers. If the power company had been upfront about their plans, the story might have had a different ending. Instead, there were rumblings about class action lawsuits and legal charges for unsolicited merchandise.

In the end, the power company decided to refund all charges and eat the 2.5 million dollars it cost to send light bulbs to 220,000 customers.

Moral of the story – communicate with the customer. This is why contemporary software development processes like Agile and XP have such a focus on customer communication. Unmet expectations make for angry customers and bad business.

Tafiti is a search visualization application built with Silverlight 1.0 and the Windows Live APIs. The Tafiti code is hosted on CodePlex under the Microsoft Public License (MS-PL), as announced by Marc Mercuri last month.

What is amazing about Tafiti is the number of controls written in 3700 lines of JavaScript. Tafiti includes custom Button, Scrollbar, Hyperlink, TextEdit, StackPanel, FlowPanel, and Carousel controls. You can find the code inside the js folder of the Tafiti web project, inside a file by the name of controls.js.

Tafiti highlights some of the pain inherent in Silverlight 1.0 development, too, most notably the pain felt from a total absence of any infrastructure needed to build these reusable controls. Tafiti provides this infrastructure for itself. For example, a generate UniqueNames method:

If Silverlight 1.0 did not have such a short shelf life, it would be worthwhile to generalize the Tafiti controls into a JavaScript library, but when Silverlight 2.0 hits the world this year I wouldn't be surprised to see those 3700 lines of code replaced with 370 lines of code. Silverlight 2.0 will take all this pain away...

I did a couple user group presentations this month on the new ASP.NET MVC framework. This post is a follow-up with all the links I promised to publish.

My first presentation of the year went to the Central Maryland Association of .NET Professionals in Columbia, MD. The turnout at the meeting was tremendous – I'd say well over 100 people were in attendance. It didn't hurt that G Andrew Duthie was giving away free copies of Visual Studio 2008 as part of the Microsoft Installfest, and the catered food was a lot better than the typical pizza-in-cardboard user group fare.

Best of all, I walked out of the meeting with a new 8 GB Zune 2 (thanks to a winning raffle ticket). Many people tried to tell me that it's not fair for the speaker to win the grand prize raffle, but I pretended not to hear them and then slipped out quietly before the mob turned ugly.

My second presentation went to the BaltoMSDN group in Hunt Valley, MD. Imagine going to a wine tasting and having a technical presentation break out. That's what BaltoMSDN is like, because they have their meetings in a private room at the Greystone Grill. Bacon wrapped sea scallops and Belgian beer on draft go well with code, I must say.

The Links

OdeToCode by K. Scott Allen
