A common question I’ve been getting is how to use tokens with ASP.NET, specifically JSON Web Tokens (JWT) with ASP.NET WebAPI where the OAuth server and the resource server are the same. In other words, you have a single web site that wants to both issue tokens to authenticated clients and verify the same tokens on incoming requests.

To pull this off with Microsoft’s OWIN based components you’ll need the Microsoft.Owin.Security.Jwt package from NuGet, which brings in a few dependencies, including System.IdentityModel.Tokens.Jwt.

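The app building setup code is simple (it is the details that are a bit trickier). We need:

1) An OAuth authorization server to issue tokens to authenticated clients.

2) JWT bearer authentication middleware to verify tokens on incoming requests.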

OWIN startup code can register both of these middleware pieces using app builder extension methods.

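using Microsoft.Owin;
using Owin;

// Fully qualified, because the attribute sits outside the namespace that declares the class.
[assembly: OwinStartup(typeof(NgPlaybook.Server.Startup.OwinStartup))]

namespace NgPlaybook.Server.Startup
{
    public class OwinStartup
    {
        public void Configuration(IAppBuilder app)
        {
            // Issues tokens at the token endpoint.
            app.UseOAuthAuthorizationServer(new MyOAuthOptions());

            // Verifies bearer tokens on incoming requests.
            app.UseJwtBearerAuthentication(new MyJwtOptions());
        }
    }
}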

Let’s talk about the two custom classes in the startup code – MyOAuthOptions (which helps in issuing tokens) and MyJwtOptions (which helps validate tokens).

The devil in the details starts with MyOAuthOptions, which is a wrapper for the OAuth server options.

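using System;
using Microsoft.Owin;
using Microsoft.Owin.Security.OAuth;

public class MyOAuthOptions : OAuthAuthorizationServerOptions
{
    public MyOAuthOptions()
    {
        TokenEndpointPath = new PathString("/token");
        AccessTokenExpireTimeSpan = TimeSpan.FromMinutes(60);

        // MyJwtFormat needs these options to compute the token expiration.
        AccessTokenFormat = new MyJwtFormat(this);
        Provider = new MyOAuthProvider();

#if DEBUG
        // Allow plain HTTP only in debug builds.
        AllowInsecureHttp = true;
#endif
    }
}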

Here we set up the server to listen at /token, just like the token middleware in an out-of-the-box Web API project with authentication.

The AccessTokenFormat property references an object implementing ISecureDataFormat<AuthenticationTicket>, which in this case is our own MyJwtFormat. The purpose of this class is to encode and sign information about an authenticated user into a string.

An ISecureDataFormat<T> object has a Protect method which does all the heavy lifting. The AuthenticationTicket object given to this method is constructed from claims put together by the server when a user successfully authenticates; we’ll look at this later.

using System;
using System.IdentityModel.Tokens;
using Microsoft.Owin.Security;
using Microsoft.Owin.Security.OAuth;

public class MyJwtFormat : ISecureDataFormat<AuthenticationTicket>
{
    private readonly OAuthAuthorizationServerOptions _options;

    public MyJwtFormat(OAuthAuthorizationServerOptions options)
    {
        _options = options;
    }

    public string SignatureAlgorithm
    {
        get { return "http://www.w3.org/2001/04/xmldsig-more#hmac-sha256"; }
    }

    public string DigestAlgorithm
    {
        get { return "http://www.w3.org/2001/04/xmlenc#sha256"; }
    }

    public string Protect(AuthenticationTicket data)
    {
        if (data == null) throw new ArgumentNullException("data");

        var issuer = "localhost";
        var audience = "all";

        // Must be valid, padded Base64 and must match the key in MyJwtOptions.
        var key = Convert.FromBase64String("UHxNtYMRYwvfpO1dS5pWLKL0M2DgOj40EbN4SoBWgfc=");
        var now = DateTime.UtcNow;
        var expires = now.Add(_options.AccessTokenExpireTimeSpan);

        var signingCredentials = new SigningCredentials(
            new InMemorySymmetricSecurityKey(key),
            SignatureAlgorithm,
            DigestAlgorithm);

        var token = new JwtSecurityToken(issuer, audience, data.Identity.Claims,
            now, expires, signingCredentials);

        return new JwtSecurityTokenHandler().WriteToken(token);
    }

    public AuthenticationTicket Unprotect(string protectedText)
    {
        // Never called by the OAuth server (see below).
        throw new NotImplementedException();
    }
}

Of course many of these details, like the audience, issuer, expiration time, and the key, you’ll want to keep in a configuration file. Also, instead of using InMemorySymmetricSecurityKey, you might want to use a certificate and X509SecurityKey.
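If you move the key into configuration, you need some way to produce one. As a sketch, assuming Node.js is available as a one-off tool, the following generates 32 random bytes (256 bits, a natural size for HMAC-SHA256) and encodes them as standard Base64. Convert.FromBase64String expects standard Base64 whose length is a multiple of four, so a 32-byte key comes out as 44 characters including one trailing "=" pad. The function name generateSigningKey is hypothetical.

```javascript
// Hypothetical helper: generate a random 256-bit key for HMAC-SHA256 signing.
const crypto = require("crypto");

function generateSigningKey() {
  // 32 random bytes encoded as standard, padded Base64 (44 characters).
  return crypto.randomBytes(32).toString("base64");
}

console.log(generateSigningKey());
```

The same string would then go into the configuration file that both MyJwtFormat and MyJwtOptions read from.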

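Note the OAuth server never uses the Unprotect method. You’d think Unprotect might come in useful when verifying a token, but that’s just not how these components work. Verification is the job of the separate JWT bearer middleware registered with UseJwtBearerAuthentication, configured by the MyJwtOptions class we’ll look at shortly.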

Now, back to how a user is actually authenticated and given claims that are encoded into the token. That’s the responsibility of the OAuth provider that plugs into the OAuth server.

using System.Security.Claims;
using System.Threading.Tasks;
using Microsoft.Owin.Security.OAuth;

public class MyOAuthProvider : OAuthAuthorizationServerProvider
{
    public override Task GrantResourceOwnerCredentials(OAuthGrantResourceOwnerCredentialsContext context)
    {
        // Pick up the username stored by ValidateClientAuthentication.
        var identity = new ClaimsIdentity("otc");
        var username = context.OwinContext.Get<string>("otc:username");
        identity.AddClaim(new Claim(ClaimTypes.Name, username));
        identity.AddClaim(new Claim(ClaimTypes.Role, "user"));
        context.Validated(identity);
        return Task.FromResult(0);
    }

    public override Task ValidateClientAuthentication(OAuthValidateClientAuthenticationContext context)
    {
        try
        {
            var username = context.Parameters["username"];
            var password = context.Parameters["password"];

            // Demo only: accept any user whose password equals the username.
            // The null check rejects requests that omit both fields.
            if (!string.IsNullOrEmpty(username) && username == password)
            {
                context.OwinContext.Set("otc:username", username);
                context.Validated();
            }
            else
            {
                context.SetError("Invalid credentials");
                context.Rejected();
            }
        }
        catch
        {
            context.SetError("Server error");
            context.Rejected();
        }
        return Task.FromResult(0);
    }
}

The ValidateClientAuthentication method is the place where you’ll make calls to a database or membership system to determine if a user is providing the correct credentials. If so, the method will add data into the OWIN request context for GrantResourceOwnerCredentials to pick up and place into claims (which ultimately become part of the auth ticket and serialized into the JWT). The code above only simulates validation by granting access to any user whose password is the same as their username.

The above code is all you’ll need to grant tokens. We also wanted middleware in the application to verify incoming tokens, and back at the beginning of the post we set up UseJwtBearerAuthentication using the following options class.

using System;
using Microsoft.Owin.Security.Jwt;

public class MyJwtOptions : JwtBearerAuthenticationOptions
{
    public MyJwtOptions()
    {
        // These values must match the ones used by MyJwtFormat.
        var issuer = "localhost";
        var audience = "all";
        var key = Convert.FromBase64String("UHxNtYMRYwvfpO1dS5pWLKL0M2DgOj40EbN4SoBWgfc=");

        AllowedAudiences = new[] { audience };
        IssuerSecurityTokenProviders = new[]
        {
            new SymmetricKeyIssuerSecurityTokenProvider(issuer, key)
        };
    }
}

You’ll need the issuer, audience, and most importantly the key to match up with the values provided by the JWT formatter we looked at earlier.

To get a token with the above code in place, a client needs to POST form encoded credentials to the /token endpoint. Be sure to include a grant_type of password.

POST /token HTTP/1.1
Host: localhost:17648
Content-Length: 51
Accept: application/json, text/plain, */*
Origin: http://localhost:17648
User-Agent: Mozilla/5.0 ...
Content-Type: application/x-www-form-urlencoded

username=sallen&password=sallen&grant_type=password

If the credentials are correct, the server will respond with a JSON payload that includes the access_token.

{"access_token":"eiJ9............AkUg","token_type":"bearer","expires_in":119}

Subsequent requests just need to include the token in an Authorization header.

GET /api/secret HTTP/1.1
Host: localhost:17648
Accept: application/json, text/plain, */*
Authorization: Bearer eiJ9............AkUg
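From JavaScript, both requests can be made with fetch. The sketch below assumes a runtime with fetch and URLSearchParams (modern browsers, Node 18 or later); the function names are hypothetical and error handling is minimal. The /token path matches TokenEndpointPath in MyOAuthOptions, and /api/secret matches the request above.

```javascript
// Hypothetical client helpers for the /token endpoint and bearer-protected APIs.

// Form-encoded body for a resource owner password grant.
function buildTokenRequestBody(username, password) {
  return new URLSearchParams({
    username: username,
    password: password,
    grant_type: "password"
  }).toString();
}

// Headers that carry the access token on subsequent requests.
function bearerHeaders(accessToken) {
  return { Authorization: "Bearer " + accessToken };
}

// Request a token, then use it to call a protected API.
async function getSecret(baseUrl, username, password) {
  const tokenResponse = await fetch(baseUrl + "/token", {
    method: "POST",
    headers: { "Content-Type": "application/x-www-form-urlencoded" },
    body: buildTokenRequestBody(username, password)
  });
  if (!tokenResponse.ok) {
    throw new Error("Token request failed: " + tokenResponse.status);
  }
  const { access_token } = await tokenResponse.json();

  const apiResponse = await fetch(baseUrl + "/api/secret", {
    headers: bearerHeaders(access_token)
  });
  return apiResponse.json();
}
```

Assuming the server is running at http://localhost:17648, getSecret("http://localhost:17648", "sallen", "sallen") would resolve to whatever JSON the protected controller returns.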

And now you can protect API and MVC controllers with the usual Authorize attribute.

[Authorize]
public class SecretController : ApiController
{
    public IHttpActionResult Get()
    {
        return Ok(new ModelThing());
    }
}

If you were to decode the token, you’d see it consists of a header and a payload (followed by a signature) that look like the following.

{"typ":"JWT","alg":"HS256"}

{"unique_name":"sallen","role":"user","iss":"localhost",
 "aud":"all","exp":1421127827,"nbf":1421127707}

This demonstrates one of the features of JWT: all the claims are in the payload. Once the server decodes and verifies the information inside the token, the server has all the information it needs about a user.
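Decoding, as opposed to verifying, needs nothing more than Base64url decoding, because the header and payload are signed, not encrypted. Here is a minimal Node.js sketch (decodeJwt and base64UrlDecode are hypothetical names) that splits a token on its dots and parses the first two segments. It deliberately ignores the signature, so its output must never be trusted for authorization decisions. That is the job of the JWT bearer middleware on the server.

```javascript
// Decode (but do NOT verify) the header and payload of a JWT.
function base64UrlDecode(segment) {
  // Convert base64url to standard base64 and restore the padding.
  let base64 = segment.replace(/-/g, "+").replace(/_/g, "/");
  while (base64.length % 4 !== 0) {
    base64 += "=";
  }
  return Buffer.from(base64, "base64").toString("utf8");
}

function decodeJwt(token) {
  const parts = token.split(".");
  if (parts.length !== 3) {
    throw new Error("Expected three dot-separated parts");
  }
  return {
    header: JSON.parse(base64UrlDecode(parts[0])),
    payload: JSON.parse(base64UrlDecode(parts[1]))
    // parts[2] is the signature, which this sketch ignores.
  };
}
```

The exp and nbf claims are seconds since the Unix epoch, so new Date(payload.exp * 1000) turns the expiration into a Date. In the sample payload above they are 120 seconds apart.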

There is a fair amount of code required to set up a JWT OAuth server with the ASP.NET middleware components, but I hope this code might give you a starting point to experiment with.

The addition of iterators in ES6 opens up a new world of algorithms and abilities for JavaScript. An iterator is an object that allows code to traverse a sequence of objects. The sequence of objects could be the values inside an array, or values in a more sophisticated data structure like a tree or map. One of the many beautiful characteristics of iterators is that code using an iterator doesn’t need to know any details about the underlying collection. All an iterator does is provide the ability to move through a sequence of items one by one and visit each item inside.

In this post we will look at some of the basic characteristics of an iterator, but a series of future posts will build on this to show both high-level features and some advanced techniques you can use with iterators, or to build your own iterators.

In ES6, array objects will have a few new methods available to work with iterators, and one of these methods is the values method. The values method returns an iterator object that can visit each value in an array.

let sum = 0;
let numbers = [1,2,3,4];
let iterator = numbers.values();
let next = iterator.next();

while(!next.done) {
    sum += next.value;
    next = iterator.next();
}

expect(sum).toBe(10);

An iterator is an object with a next method. Each time the program invokes the next method, the method returns an object with two properties: value and done. The value property represents the next item in the sequence being iterated. The done property is a flag holding the value false if there are more items to iterate through, or the value true if iteration has passed the final item and is complete.
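Because an iterator is nothing more than an object with a next method, you can write one by hand. The following sketch, using a hypothetical rangeIterator function, produces the integers from start up to (but not including) end, following the same value/done protocol as numbers.values().

```javascript
// A hand-written iterator: any object whose next() returns { value, done }.
function rangeIterator(start, end) {
  let current = start;
  return {
    next() {
      if (current < end) {
        // More items remain: hand out the current one and advance.
        return { value: current++, done: false };
      }
      // Past the final item: iteration is complete.
      return { value: undefined, done: true };
    }
  };
}

// Consume it with the same loop used for numbers.values().
let sum = 0;
const iterator = rangeIterator(1, 5);
let next = iterator.next();
while (!next.done) {
  sum += next.value;
  next = iterator.next();
}
console.log(sum); // 10
```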

Iterators exist in many other languages, and in some environments, making changes to a sequence during iteration will result in a runtime exception. In JavaScript, an array iterator does not throw when the array changes. It reads the array by index as iteration proceeds, so it sees whatever the array holds at that moment. You’d still want to exercise caution, because the changes can certainly create confusion. The following code changes the underlying array in the 2nd pass of the while loop, pushing the value 5 to the end of the array and unshifting the value 1 onto the beginning. The iterator will see the values 1, 2, 2, 3, 4, 5.

let count = 0;
let sum = 0;
let numbers = [1,2,3,4];
let iterator = numbers.values();
let next = iterator.next();

while(!next.done) {
    if(++count == 2) {
        numbers.push(5);
        numbers.unshift(1);
    }
    sum += next.value;
    next = iterator.next();
}

expect(sum).toBe(17);
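If a loop really must modify the array, one way to sidestep the confusion is to iterate over a copy. In this sketch, slice() takes a shallow snapshot before iteration starts, so the iterator sees only the original four values even though the loop pushes and unshifts exactly as before.

```javascript
let count = 0;
let sum = 0;
let numbers = [1, 2, 3, 4];

// Iterate over a shallow copy so changes to numbers don't affect the iteration.
let iterator = numbers.slice().values();
let next = iterator.next();

while (!next.done) {
  if (++count === 2) {
    numbers.push(5);
    numbers.unshift(1);
  }
  sum += next.value;
  next = iterator.next();
}

console.log(sum);            // 10
console.log(numbers.length); // 6
```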

Next up: using iterators the easy way with the new for...of loop.

The year 2015 starts and I’m working on a code generator, which is one of the more enjoyable tasks in software for me. Code generators and meta programming require considerably more mental focus than banging out business code, the kind of focus where any interruption is tantamount to dumping the Atlantic Ocean on a continental-scale forest fire. It also means the interrupter is subject to an assortment of growls, stares, and verbal assaults, and for these I apologize.

There are a couple of specific challenges in this project, but the general challenge in building code generation software is building a model that can serve two masters. On one hand, the model has to make the actual generation phase easier. On the other hand, the model has to be easy to build from some source of data, be it a visual designer or, in my case, XML. Not just any XML, but XML of debilitating complexity, as one critic puts it, and I agree. There are times when I suspect someone has willfully obfuscated the XML, but then I remember Hanlon’s razor (with a twist):

Never attribute to malice that which is adequately explained by stupidity, or is the output of a standards committee.

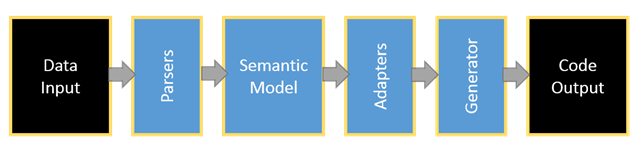

Regardless of the data source, I’ve been to the codegen rodeo before, and the code usually falls into place like the following:

Adapters have to surround the model to make it usable from both ends. In this scenario there are parsers to transform XML into meaningful data structures, and adapters to facilitate the code generation. Ironically, this has been done before as open source, but the existing implementation is written in JavaScript. JavaScript and its various server-side runtimes have some strengths, but algorithmic number crunching and set sorting at scale are not among them.

I’m replacing JavaScript code then, with something I hope is better. The irony is how I spent 2014 writing more JavaScript code than I have in the previous 15 years, on the server but mostly on the client, and I needed a break. A problem with real teeth. It’s not that front end development doesn’t have any challenges, but I’ve been frustrated by the irresponsible amounts of accidental complexity in front end development.

Ah, you must be talking about the complex framework by the name of Angular.

Well, no, but let’s use Angular as an example. It is, truthfully, a simple framework when you discard all the permutations offered and stick to simple, disciplined, and calculated practices. Projects go haywire for any number of reasons; here are two examples I’ve seen in just the last week.

1) Projects that treat Angular modules as CommonJS or AMD modules, in the sense that every little component needs a dedicated module. With Angular this practice only creates more work and bookkeeping for little gain.

2) The confusion between module.factory and module.service. I always try to tell people to think of factory and service as registration APIs only. To the consumer it doesn’t matter which one you choose; both create a service component. When people tell me they are passing a factory to their controller, I get confused. The factory API registers a factory function, but that function is not what the injector passes to a controller. The injector passes the function’s return value, which is the service.
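To make the distinction concrete, here is a deliberately simplified, hypothetical registry. It is not Angular’s injector, and makeRegistry, get, and the registration names are invented for illustration. A factory registration stores a function whose return value becomes the service; a service registration stores a constructor that the registry calls with new. Either way the consumer receives the resulting object, never the registered function.

```javascript
// Toy model of factory vs. service registration (NOT Angular's real injector).
function makeRegistry() {
  const recipes = {};
  const instances = {};
  return {
    // Register a function whose return value is the service.
    factory(name, fn) {
      recipes[name] = () => fn();
    },
    // Register a constructor; the service is the object created with new.
    service(name, Ctor) {
      recipes[name] = () => new Ctor();
    },
    // Consumers ask by name and get the created object, built once and cached.
    get(name) {
      if (!(name in instances)) {
        instances[name] = recipes[name]();
      }
      return instances[name];
    }
  };
}

const registry = makeRegistry();
registry.factory("greeterA", function () {
  return { greet: name => "Hello, " + name };
});
registry.service("greeterB", function () {
  this.greet = name => "Hello, " + name;
});

// Both consumers receive an object with a greet method, not a function.
console.log(registry.get("greeterA").greet("Scott")); // Hello, Scott
console.log(registry.get("greeterB").greet("Scott")); // Hello, Scott
```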

Taking a step back, both of these problems stem from one of the “two hard things”:

There are only two hard things in Computer Science: cache invalidation and naming things.

-- Phil Karlton

And that’s something I won’t escape from. Naming things is hard on the front end, and here on the back end, too.

I’m a fan of the PageObject pattern when writing Protractor tests, because PageObjects make the test code more expressive and readable. When I sit down to write some Protractor tests, I usually write the test code I want to see first, and then try to make it happen.

For example, when writing some tests for a “login and redirect” scenario, I started off with some test specs like the following.

describe("The security application", function () {

    var secret = new SecretPage();
    var login = new LoginPage();

    it("should redirect to login page if trying to view a secret as anonymous user", function () {
        secret.go();
        expect(browser.getCurrentUrl()).toBe(LoginPage.url);
    });

    it("should go back to the secret after a login", function () {
        login.login("sallen", "sallen");
        expect(browser.getCurrentUrl()).toBe(SecretPage.url);
    });
});

These tests require two page objects, the SecretPage and the LoginPage. Since Protractor tests run in Node, each page can live in a distinct module. First, the SecretPage.

var config = require("./config");

var SecretPage = function () {
    this.go = function() {
        browser.get(SecretPage.url);
    };
};

SecretPage.url = config.baseUrl + "security/shell.html#/secret";

module.exports = SecretPage;

And the LoginPage.

var config = require("./config");

var LoginPage = function () {
    this.go = function() {
        browser.get(LoginPage.url);
    };

    this.login = function(username, password) {
        $(".container [name=username]").sendKeys(username);
        $(".container [name=password]").sendKeys(password);
        $(".container [name=loginForm]").submit();
    };
};

LoginPage.url = config.baseUrl + "security/shell.html#/login";

module.exports = LoginPage;

Both of these modules depend on a config module to remove hard coded URLs and magic strings. A simple config might look like the following.

var config = {
    baseUrl: "http://localhost:9000/Apps/"
};

module.exports = config;

Now all the spec file needs to do is require the two page modules.

var SecretPage = require("../pages/SecretPage");
var LoginPage = require("../pages/LoginPage");

describe("The security application", function () {
    // ...
});

And presto! Readable Protractor tests are passing.

In a previous post we took a first look at arrow functions in ES6. This post will demonstrate another feature of arrow functions, as well as some additional scenarios where you might find arrow functions to be useful.

Every JavaScript programmer knows that the this pointer is a bit slippery. Consider the code in the following class, particularly the code inside of doWork.

class Person {
    constructor(name) {
        this.name = name;
    }

    doWork(callback) {
        setTimeout(function() {
            callback(this.name);
        }, 15);
    }
}

The anonymous function the doWork method passes into setTimeout will execute in a different context than doWork itself, which means the this reference will change. When the anonymous function passes this.name to a callback, this won’t point to a person object and the following test will fail.

it("should pass the name to the callback", function(done) {
    var person = new Person("Scott");
    person.doWork(function(result) {
        expect(result).toBe("Scott"); // fail!
        done();
    });
});

Since developers can’t rely on JavaScript’s this reference when asynchronous code and callbacks are in the mix, a common practice is to capture the value of this in a local variable and use the local variable in place of this. The local variable is commonly named self, me, or that.

doWork(callback) {
    var self = this;
    setTimeout(function() {
        callback(self.name);
    }, 15);
}

The above code will pass, thanks to self capturing the this reference.

If we use an arrow function in doWork, we no longer need to capture the this reference!

doWork(callback) {
    setTimeout(() => callback(this.name), 15);
}

The previous test will pass with the above code in place because an arrow function will automatically capture the value of this from its outer, parent function. In other words, the value of this inside an arrow function will always be the same as the value of this in the arrow’s enclosing function. There is no more need to use self or that, and you can nest arrow functions arbitrarily deep to preserve this through a series of asynchronous operations.
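The same rule applies to synchronous callbacks. In the sketch below (the Scaler class is hypothetical), the arrow function passed to map sees the this of scale, while the regular function in scaleBroken gets its own this. Class bodies are always strict mode code, so that inner this is undefined and reading this.factor throws a TypeError.

```javascript
class Scaler {
  constructor(factor) {
    this.factor = factor;
  }

  scale(values) {
    // Arrow function: this is the Scaler instance, borrowed from scale().
    return values.map(v => v * this.factor);
  }

  scaleBroken(values) {
    // Regular function: map calls it with this undefined (strict mode),
    // so this.factor throws a TypeError.
    return values.map(function (v) {
      return v * this.factor;
    });
  }
}

const scaler = new Scaler(3);
console.log(scaler.scale([1, 2, 3])); // [ 3, 6, 9 ]
```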

If you embrace the arrow function syntax, you might find yourself using the syntax with every few lines of code you write. JavaScript’s affinity for asynchronous operations has created an enormous number of APIs requiring callback functions. However, as JavaScript is a solid functional programming language, more and more synchronous APIs have also appeared with function arguments.

For example, ES5 introduced forEach and map methods on array objects.

let result = [1,2,3,4].map(n => n * 2);
expect(result).toEqual([2,4,6,8]);

let sum = 0;
[1,2,3,4].forEach(n => sum += n);
expect(sum).toBe(10);

There are also many libraries like underscore and lodash that use higher order functions to implement filtering, sorting, and grouping of data. Without arrow functions, the code looks like the following.

let characters = [
    { name: "barney", age: 36, blocked: false },
    { name: "fred", age: 40, blocked: true },
    { name: "pebbles", age: 1, blocked: false }
];

let result = _.find(characters, function(character) {
    return character.age < 40;
});

With arrow functions, the result calculation becomes more expressive.

let result = _.find(characters, character => character.age < 40);
expect(result.name).toBe("barney");
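Arrow functions pair just as well with the array methods built into the language. As a sketch using the same characters data, filter, sort, and reduce cover filtering, sorting, and a simple grouping without a library, and ES6 adds find as the built-in counterpart of _.find. The "active"/"blocked" grouping shape is just one possible choice.

```javascript
let characters = [
  { name: "barney", age: 36, blocked: false },
  { name: "fred", age: 40, blocked: true },
  { name: "pebbles", age: 1, blocked: false }
];

// Filtering: everyone younger than 40.
let young = characters.filter(c => c.age < 40);

// Sorting: youngest first (slice() so the original array is untouched).
let byAge = characters.slice().sort((a, b) => a.age - b.age);

// Grouping: names collected under "active" and "blocked" keys.
let byBlocked = characters.reduce((groups, c) => {
  let key = c.blocked ? "blocked" : "active";
  (groups[key] = groups[key] || []).push(c.name);
  return groups;
}, {});

// ES6 find: the first character matching the predicate.
let firstYoung = characters.find(c => c.age < 40);

console.log(young.map(c => c.name)); // [ 'barney', 'pebbles' ]
console.log(byAge.map(c => c.name)); // [ 'pebbles', 'barney', 'fred' ]
console.log(byBlocked);              // { active: [ 'barney', 'pebbles' ], blocked: [ 'fred' ] }
console.log(firstYoung.name);        // barney
```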

You can even write a Jasmine test using an arrow function.

it("can work with Jasmine", () => {
    var numbers = [1,3,4];
    expect(numbers.length).toBe(3);
});

Many of the examples shown in this section, like the examples using forEach, map, and _, work with the concept of collections. Other features of ES6, like iterators, will make working with collections noticeably more flexible and reliable. We’ll turn our attention to iterators next.

JavaScript has always been a functional programming language. You can pass functions as arguments to other functions, and invoke a function that returns a function back to you. The ES6 standard improves JavaScript’s functional programming capabilities by offering a new, succinct syntax for creating functions. In addition, ES6 defines new syntax to offer features closely associated with functional programming, features like iterators and lazy evaluation. Let’s start looking at the new capabilities by looking at the arrow function, which will look familiar to anyone who has worked with CoffeeScript or C# code.

An arrow function doesn’t require the function keyword, or curly braces, or a return statement. However, arrow functions do require a new operator, the =>, which looks like an arrow pointing to the right and which gives these expressions their name. To build an arrow function for adding two numbers, one simply has to write the following.

let add = (x,y) => x + y;

On the left-hand side of the arrow is the parameter list for the function. In this example there are two parameters, x and y. To the right of the arrow is the executable code. In this case, the code returns the sum of x and y. You could think of the arrow as pointing the parameters to the code that uses them.

Invoking an arrow function looks no different than invoking a traditional function.

let result = add(3, 5);
expect(result).toBe(8);

Arrow functions that use exactly one parameter do not need to surround the parameter with parentheses.

let square = x => x * x;

If an arrow function requires zero parameters, or more than one parameter, then the parentheses are required.

let add = (x,y) => x + y;              // required parens
let square = x => x * x;               // optional parens
let compute = () => square(add(5,3));  // required parens
let result = compute();
expect(result).toBe(64);

So far, the examples use a single expression to the right of the arrow operator. When using a single expression, curly braces and return statements are not required. The return value of the function will be the value of the expression.

An arrow function may also contain a more complex block of statements, but a block body requires braces and an explicit return (if the function wants to return a value).

let add = (x,y) => {
    var result = x + y;
    return result;
};
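One consequence of the braces rule: because a brace right after the arrow always starts a block, returning an object literal from a single-expression arrow function requires wrapping the literal in parentheses. The makePoint names below are just for illustration.

```javascript
// Parentheses make the braces an object literal expression.
let makePoint = (x, y) => ({ x: x, y: y });

// Without them, the braces are a block: "x: x" is a labeled statement,
// there is no return, and the function returns undefined.
let makePointBroken = (x, y) => { x: x };

console.log(makePoint(1, 2));       // { x: 1, y: 2 }
console.log(makePointBroken(1, 2)); // undefined
```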

In the next post of this series, we’ll look at a special quality of arrow functions, and also see how we can use them in some common scenarios.

Continuing from a previous post…

Over the years, there have been various approaches to building class hierarchies in JavaScript. All these approaches worked by stitching together constructor functions and prototype objects, and each approach was slightly different from the others.
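For comparison, here is a sketch of one common pre-ES6 pattern using ES5 features (Object.create and call). It is one of the many variations, not the only one. Every piece of the wiring is manual: running the parent constructor, linking the prototypes, and repairing the constructor property.

```javascript
// ES5-style inheritance: constructor functions stitched together by hand.
function Person(name) {
  this.name = name;
}
Person.prototype.doWork = function () {
  return this.name + " works for free";
};

function Employee(name, title) {
  Person.call(this, name); // run the parent constructor on this object
  this.title = title;
}
// Link Employee's prototype to Person's, then repair the constructor property.
Employee.prototype = Object.create(Person.prototype);
Employee.prototype.constructor = Employee;
Employee.prototype.doWork = function () {
  return this.name + " works for a salary";
};

var e1 = new Employee("Scott", "Developer");
console.log(e1.doWork());          // Scott works for a salary
console.log(e1 instanceof Person); // true
```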

The ES6 standard allows for a declarative, consistent approach to inheritance using the class and extends keywords. As an example, let’s start with a simple class to represent a person with a name.

class Person {
    get name() {
        return this._name;
    }

    set name(newName){
        if(newName){
            this._name = newName;
        }
    }
}

var p1 = new Person();
p1.name = "Scott";
expect(p1.name).toBe("Scott");

Now we also need a class to represent an employee who has both a name and a title. Using the extends keyword, we can have an Employee class inherit from the Person class, meaning every object instantiated as an Employee will also have all the methods and properties available for a Person.

class Employee extends Person {
    get title() {
        return this._title;
    }

    set title(newTitle) {
        this._title = newTitle;
    }
}

var e1 = new Employee();
e1.name = "Scott"; // inherited from Person
e1.title = "Developer";
expect(e1.name).toBe("Scott");
expect(e1.title).toBe("Developer");
expect(e1 instanceof Employee).toBe(true);
expect(e1 instanceof Person).toBe(true);

When describing the relationship between Employee and Person we often say Person is a super class of Employee, while Employee is a sub class of Person. We can also say that every employee is a person, and as you can see in the above code, the instanceof check in JavaScript confirms that an object created using the Employee class is also an instance of Person.

While every Employee object will inherit all the state and behavior of a Person, the Employee class also has the ability to override the methods and properties defined by Person. For this example, let’s add a doWork method to every Person.

class Person {
    get name() {
        return this._name;
    }

    set name(newName){
        if(newName){
            this._name = newName;
        }
    }

    doWork() {
        return this.name + " works for free";
    }
}

var p1 = new Person();
p1.name = "Scott";
expect(p1.doWork()).toBe("Scott works for free");

By default, an Employee will behave the same as a Person.

var e1 = new Employee();
e1.name = "Scott";
e1.title = "Developer";
expect(e1.doWork()).toBe("Scott works for free");

However, in the Employee class we can override the behavior of doWork by providing a new implementation.

class Employee extends Person {
    // ...
    doWork() {
        return this.name + " works for a salary";
    }
}

var p1 = new Person();
p1.name = "Scott";
expect(p1.doWork()).toBe("Scott works for free");

var e1 = new Employee();
e1.name = "Scott";
e1.title = "Developer";
expect(e1.doWork()).toBe("Scott works for a salary");

Now, as the tests prove, objects instantiated from the Employee class will behave slightly differently in the doWork method than objects instantiated from the Person class. In object-oriented programming, we call this behavior polymorphism.
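Polymorphism pays off when code treats a mixed collection uniformly. Here is a short sketch, with Person and Employee trimmed to a constructor and doWork so the snippet runs on its own:

```javascript
class Person {
  constructor(name) {
    this.name = name;
  }
  doWork() {
    return this.name + " works for free";
  }
}

class Employee extends Person {
  doWork() {
    return this.name + " works for a salary";
  }
}

// The callback calls doWork without knowing which class each object came from.
let people = [new Person("Scott"), new Employee("Alex")];
let results = people.map(p => p.doWork());
console.log(results); // [ 'Scott works for free', 'Alex works for a salary' ]
```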

There are times when a class will want to invoke behavior in its super class directly, which can be done with the super keyword. For example, the Employee class could implement the doWork method as follows.

doWork() {
    return super.doWork() + "!";
}

Invoking super.doWork() executes the Person implementation of doWork, so with the above code a passing test would now look like the following.

var e1 = new Employee();
e1.name = "Alex";
e1.title = "Developer";
expect(e1.doWork()).toBe("Alex works for free!");

Note that a bare call to super(), without a method name, is only legal inside a constructor. In any other method the super class method must be named explicitly, and through super a method can reach any method in the super class, not only the one it overrides.

One common scenario for using super is when inheriting from a class that requires constructor parameters. The following Person class now accepts a name parameter during initialization.

class Person {
    constructor(name) {
        this._name = name;
    }

    get name() {
        return this._name;
    }
}

Now an employee will need both a title and a name during initialization.

class Employee extends Person {
    constructor(name, title) {
        super(name);
        this._title = title;
    }

    get title() {
        return this._title;
    }
}

Notice how the Employee constructor method uses super to pass along the name parameter to the Person constructor and reuse the logic inside the super class. JavaScript gives you some freedom over when to call the super class constructor: code that doesn’t touch this can run before the call to super. Beyond that the rules are strict. In a derived class, this is unavailable until super() has been called, so touching it earlier throws a ReferenceError, as does returning from the constructor without ever calling super() (unless the constructor returns an object of its own). If I were to pick a style to remain consistent, I’d always make a call to super in a sub class before executing any other logic inside the constructor. This style would keep JavaScript classes consistent with the behavior of other object-oriented languages.
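The sketch below shows where the flexibility ends under the final ES2015 rules (all class names are hypothetical). Code that doesn’t touch this may run before super(), but touching this first, or never calling super(), throws a ReferenceError when the object is constructed.

```javascript
class Base {
  constructor(value) {
    this.value = value;
  }
}

// Allowed: work that doesn't use this can happen before super().
class Normalized extends Base {
  constructor(text) {
    const trimmed = text.trim();
    super(trimmed);
  }
}

// Not allowed: this is uninitialized until super() returns.
class TooEarly extends Base {
  constructor(value) {
    this.extra = true; // ReferenceError at construction time
    super(value);
  }
}

// Not allowed: a derived constructor must call super() (or return an object).
class NoSuper extends Base {
  constructor() {}
}

console.log(new Normalized("  Scott ").value); // Scott
```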

Now that we know what inheritance looks like in ES6, it’s time for a word of caution. Inheritance is a fragile technique to achieve re-use that only works well in a limited number of scenarios. Do a search for “composition over inheritance” to see more details.

OdeToCode by K. Scott Allen
