Rob just put the M into MVC using Subsonic and a Subsonic MVC Template. The template allows you to go from 0 to functioning application in less time than it takes to listen to a Rob Zombie song. Fantastic work by Rob C, and it leads nicely into the actual question behind this series of posts:

“I have created domain entities like Customer, Order, etc. They come out of repository classes backed by NHibernate. I’m wondering if I send them directly to the view or if I create a ViewModel class to hold data. I’m confused by all the terminology”.

This is a difficult question to answer without looking at the specifics of an application, but what follows is my opinion on the matter.

To me, an entity is a business object that is full of data and behavior. The data probably comes from a database. The behavior comes from code you write to implement “business logic”. Perhaps the business logic is simple validation code, or perhaps the business logic is code that talks to other entities and objects in your business layer to coordinate more complex business goals – like placing an order and running a long business process.

An entity exists to serve the business layer. If the customer asks you to change how the order total displays, you’ll make the change in the UI and not touch any entities. But, if the customer asks you to change how the order total is calculated, you’ll make the change in the business logic and possibly modify the Order entity itself. Since the entity exists to serve the business layer you should assume the business layer owns all entities.

It is reasonable to share these business layer owned entities with the UI layer in many applications. This is what happens if you use Rob’s templates. Here is an excerpt from a template generated controller:

    //
    // GET: /Movie/Edit/5
    public ActionResult Edit(int id)
    {
        var item = _repository.GetByKey(id);
        return View(item);
    }

    //
    // POST: /Movie/Edit/5
    [AcceptVerbs(HttpVerbs.Post)]
    public ActionResult Edit(Movie item)
    {
        if (ModelState.IsValid)
        {
            string resultMessage = "";
            try
            {
                _repository.Update(item);
                resultMessage = item.DescriptorValue() + " updated!";
            }
            // ...
        }
    }

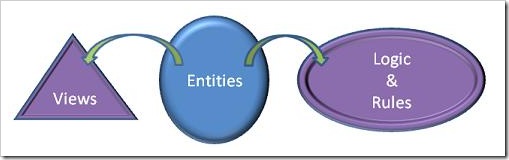

Entities come out of repositories and go directly into a view. During a save operation, the entities materialize from the view and go to a repository. The approach is simple to understand, and works well if you can picture your software like the following:

The entities are round pegs, while the business logic and views are round holes. There is no friction because the entities fit the needs of both layers perfectly. What you are building in these scenarios is a “forms-over-data” application. These types of applications usually have the following characteristics:

I’m not trying to make “forms-over-data” applications sound trivial or stupid. If all you need is a data entry application then you don't want to add unnecessary complexity to your software. Share entities with the UI views and keep things simple. Simple works well.

In the next post we’ll talk about when to avoid sharing entities with your UI layer. As you might guess, it will involve a diagram like this:

Microsoft has discovered, after several well-funded studies, that the names they use for exceptions in the .NET framework create pathological levels of anxiety and stress in programmers. Thus, for .NET 4.0 they decided to create a “kinder and gentler” version of .NET by renaming exceptions in the framework class library.

The changes I know about so far:

Unfortunately, we will need to update and recompile our code for .NET 4.0, but imagine the healthier lifestyle that will result from friendly error messages.

Model is one of those overloaded words in software. Just saying the word model can produce a wide range of expectations depending on the context of the conversation. There are software process models, business process models, maturity models, domain models, security models, life cycle models – the list goes on and on. Nerds love abstractions, so it’s not surprising that we have so many models to choose from.

Let’s talk about a specific model – the model in model-view-controller. What is it? How do you build one? These are the types of questions a developer will ask when working with the ASP.NET MVC framework. As Simone Tokumine points out in his post “.NET MVC vs Ruby on Rails”, there isn’t any direct and specific guidance from Microsoft:

.NET MVC is actually .NET VC. It is an attempt to replicate the Actionpack components of Rails. There is nothing included aside from a folder labeled “Models” to help you with your persistence layer and domain modeling.

The scenario isn’t unique to the MVC framework. Microsoft has a long history of leaving the model of an application open to interpretation, and this stance is both a blessing and a curse. A blessing because we can build models to meet the distinctive needs of every application imaginable, and we can use a variety of tools, frameworks, patterns, and methodologies to build those models. However, the curse is that many of us never look outside of the System.Data namespace. Populated DataSets and data readers are the models in many applications, even when they aren’t the best fit.

One reason that DataSets dominate .NET development is that they are featured in the majority of samples and documentation, particularly for ASP.NET Web Forms. In contrast, ASP.NET MVC samples typically feature business objects as models. Even the new MSDN documentation says:

Model objects are the parts of the application that implement the domain logic, also known as business logic.

It’s not surprising then, to see many people using domain objects, business objects, and entities produced by LINQ to SQL or the Entity Framework as model objects.

Do business objects and entities really make the best models?

This is the question I'd like to explore by sharing my opinion and gathering feedback over the course of two more blog posts...

The ASP.NET Connection show in Orlando was a fantastic event. Thanks to everyone who came to a session. Here are the slides and demos for everyone who asked for them.

In the last half of this session we re-factored a dashboard type application with an eye towards using properly abstracted JavaScript code. The dashboard page was loosely based on the code from my Extreme ASP.NET column in the March MSDN Magazine: Charting with ASP.NET and LINQ.

Part of the refactoring process was removing all signs of JavaScript from the .aspx file to achieve the separation of behavior and markup that defines “unobtrusive JavaScript”. If you give JavaScript the focus it deserves, it can love you back.

Other links:

Advanced LINQ Queries and Optimizations

LINQ is inherently more about productivity and expressiveness than performance. We talked about how to avoid unnecessary performance penalties with LINQ, some non-obvious optimizations, and the first optimization you should make - optimizing for readability.

Other links:

A little Blend, a little XAML, a little Visual Studio.

Links:

JavaScript has made some improvements in its “state of the art” over the last several years, despite your best attempts to ignore the language.

Yes, you.

The language hasn’t changed, but the tools, practices, runtimes, and general body of knowledge have all grown and matured. Yes, it’s still a dynamic language, and we all know that you think dynamic languages are more dangerous than a loaded gun, but you can’t ignore the language any longer. JavaScript is everywhere. Why, just the other day I turned on “Who Wants To Be A Millionaire?”, and what did I see?

Here are some signs that you might be behind the times.

1. If you still mix JavaScript and markup …

    <html>
    <head>
        <script type="text/javascript">
            function doStuff() {
                alert("boo");
            }
        </script>
    </head>
    <body onload="doStuff();">
        ...
    </body>
    </html>

… you should read up about unobtrusive JavaScript. In addition to the performance benefits of keeping script in a .js file that a browser can cache, you also separate your presentation concerns from script behavior and allow yourself to focus on writing better script.
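As a minimal sketch of the unobtrusive version, the handler can move into an external file and attach itself to the load event, so the markup carries no `onload` attribute at all. The file name `site.js` and the `attachOnLoad` helper are hypothetical names used only for illustration. `addEventListener` is the standard DOM method, but older versions of Internet Explorer do not support it, which is one more reason to lean on a library.

```javascript
// site.js (hypothetical file name), referenced from the page with
// <script type="text/javascript" src="site.js"></script>

function doStuff(notify) {
    // Same behavior as the inline version. notify is a parameter so
    // the function can be exercised outside a browser.
    notify("boo");
}

// Attach the behavior from script instead of an onload attribute.
// target is anything exposing addEventListener, such as window.
function attachOnLoad(target, notify) {
    target.addEventListener("load", function () {
        doStuff(notify);
    });
}

// In a browser, wire things up against the real window object.
if (typeof window !== "undefined" && typeof window.addEventListener === "function") {
    attachOnLoad(window, function (message) { alert(message); });
}
```

With this in place the body tag is just `<body>`, and the browser can cache the script file.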

2. If you still use document.getElementById and assign functions to onclick …

    var header = document.getElementById("header");
    header.onclick = headerClick;

… then you really need to start using one or more JavaScript libraries. There are many great libraries available – just look around. They help isolate you from variations in the browser environments, increase your productivity, and allow you to write more maintainable code.

Other obsolete patterns:

3. Using document.all or document.write

4. Using global variables and global functions

5. Rolling your own browser detection code

6. Debugging with alert messages

Once you learn modern JavaScript idioms and tools, you’ll never look back at these old anti-patterns.
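As one illustration of item 4, a common way to avoid global variables and global functions is to wrap related code in an immediately invoked function and expose a single namespace object. This is only a sketch; the `dashboard` name and its members are hypothetical.

```javascript
// A single namespace object replaces several globals.
var dashboard = (function () {
    // Private state: visible only inside this closure.
    var clickCount = 0;

    function recordClick() {
        clickCount += 1;
        return clickCount;
    }

    function getClickCount() {
        return clickCount;
    }

    // Only these members are reachable from outside.
    return {
        recordClick: recordClick,
        getClickCount: getClickCount
    };
}());

dashboard.recordClick();
dashboard.recordClick();
console.log(dashboard.getClickCount()); // 2
```

The counter cannot be overwritten by another script on the page, because nothing outside the closure can see `clickCount`.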

A friend recently had to replace some electrical outlets in her house because they stopped functioning. There was so much corrosion built up between the aluminum wiring and the outlet contacts that the outlets quit working (which is much better than the alternative - catching on fire).

Did you say aluminum wiring?

During the classic rock era of the 1960s and 70s, aluminum wiring became a popular replacement for copper wiring in the USA. Due to a copper shortage, aluminum was cheaper than copper and allowed electrical contractors to lower construction costs. I was quite shocked to hear about aluminum wiring in her home, since I’ve only seen copper myself, but according to the CPSC there were ~2 million homes with aluminum wiring by 1974.

Over the years, a number of problems with aluminum began to surface. Aluminum wiring is more brittle than copper, and much more likely to oxidize, corrode, and overheat. Aluminum wiring just isn’t as safe as copper wiring* and is now banned in the electrical codes of many jurisdictions. Aluminum wiring lives on in many houses, however, because it’s expensive to swap out a piece of embedded infrastructure like wiring.

Engineering is always about tradeoffs. But many times we start using a technology, tool, or methodology because it appears to save us time or money. It’s only later that we can see the problems clearly.

My question for you is:

What do you think is the “aluminum wiring” inside today’s software? What have we adopted recently that we’ll look back on in 3 years and say “ouch”?

Here are a few candidates to start the conversation (based on an informal poll of random developers I accosted):

* If you are replacing outlets with aluminum wiring coming in, please, please, please be sure to use an outlet made for aluminum. They are more expensive, and the young clerk at the store will try to sell you an outlet for copper wire, which can be a fire hazard.

When my jQuery code doesn’t work, it usually means I’ve done something terribly wrong with my selectors. Thus, my first rule of debugging code that uses jQuery:

Make sure the selector is actually selecting what you want selected.

I can liberally apply rule number one before fiddling with event handlers and method calls that come later, because it’s easy to do and saves me time.

Step 1: Navigate to the page with a problem and open FireBug.*

Step 2: Type your opening selector in the command bar of the console.

Step 3: Verify the object length is > 0

If you need to take a look at the objects you’ve selected, then a little console logging inside FireBug goes a long way.

    $("div > a").each(function() {
        console.log($(this).text());
    });
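The check in step 3 can also be wrapped in a small helper for repeated use in the console. This is a sketch and not part of jQuery: `checkSelector` is a hypothetical name, and it takes the jQuery function as a parameter so that it relies only on the `length` property of the object jQuery returns.

```javascript
// Hypothetical helper: reports how many elements a selector matches.
// jq is the jQuery function (normally $); logger defaults to console.
function checkSelector(jq, selector, logger) {
    var log = logger || console;
    var count = jq(selector).length;
    if (count === 0) {
        log.warn("Selector matched nothing: " + selector);
    } else {
        log.log("Selector matched " + count + " element(s): " + selector);
    }
    return count;
}

// In the FireBug console: checkSelector($, "div > a");
```

A warning for an empty result makes the "selected nothing" case stand out before you start looking at event handlers.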

I hope this tip saves you as much time as it has saved me!

* Technically, any JavaScript execution environment will work, like the Visual Studio immediate window, but the FireBug / Firefox combination is simple and works every time. Another one I like is the jsenv bookmarklet from squarefree.com.

OdeToCode by K. Scott Allen
