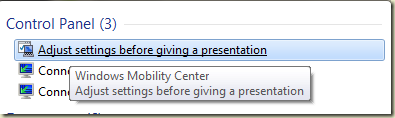

Presentation mode isn’t new (it was introduced in Vista), but it is handy. One easy way to turn presentation mode on is to type “present” into the Start menu search box and let Windows find the “Adjust settings before giving a presentation” item.

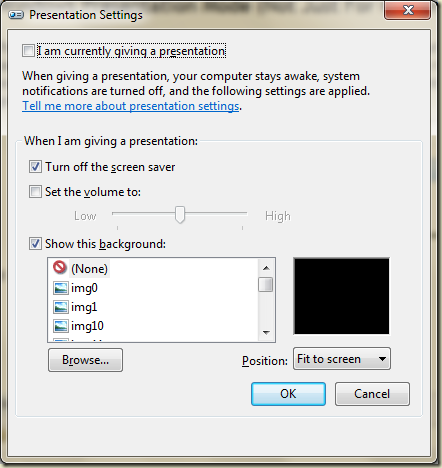

From here you can turn on presentation mode and tweak some settings.

Update: As Jim O’Neil points out, you can also use the Windows+X key combo to launch the mobility center, and then it’s one click to turn on presentation mode. I learn a new shortcut key every day…

Notice the help text says “your computer stays awake”. I turned on presentation mode today just to keep my laptop awake while it sat in a corner copying a 16 GB file over WiFi.

Of course, presentation mode is useful when you have an actual presentation, too. In addition to the background, screen saver, and volume settings, Messenger will mark your status as busy and not throw toast on the screen (you’ll only get a blinky icon in the taskbar). Other applications can disable their notifications, too, but the author of the application has to write the code to be aware of notification state. Kirk Evans has an example.

Unfortunately, presentation mode is only available on laptops by default. It turns out you can do a fair amount of presenting from a desktop with Camtasia (love it) and Shared View.

The good news is you can have presentation mode on a desktop with just a couple of registry tweaks: http://www.dubuque.k12.ia.us/it/mobilitycenter/

presentationsettings.exe is the name of the executable that displays the dialog shown above. If you want to toggle presentation mode from a script you can use:

presentationsettings /start

and

presentationsettings /stop
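If you would rather toggle presentation mode from code than from a batch file, the same two commands can be wrapped in a small helper. This is only a sketch: the `PresentationMode` class and its method names are made up for illustration, and it assumes presentationsettings.exe can be found on the path of the calling process (which may not hold for a 32-bit process on 64-bit Windows).

```csharp
using System;
using System.Diagnostics;

// Hypothetical helper; only the executable name and the /start and /stop
// switches come from the text above.
public static class PresentationMode
{
    // Builds the command line for turning presentation mode on or off.
    public static ProcessStartInfo BuildCommand(bool enable)
    {
        return new ProcessStartInfo
        {
            FileName = "presentationsettings.exe",
            Arguments = enable ? "/start" : "/stop",
            UseShellExecute = false
        };
    }

    // Turns presentation mode on, runs the work, and turns it off again
    // even if the work throws.
    public static void RunWhileAwake(Action work)
    {
        Toggle(true);
        try
        {
            work();
        }
        finally
        {
            Toggle(false);
        }
    }

    private static void Toggle(bool enable)
    {
        // Disposing the Process object releases the handle; it does not
        // terminate the launched program.
        using (Process.Start(BuildCommand(enable)))
        {
        }
    }
}
```

Something like `PresentationMode.RunWhileAwake(() => File.Copy(source, target))` would then keep the machine awake for the duration of a long copy.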

Another handy executable is mblctr.exe. This program launches the Windows Mobility Center with a UI to tweak brightness, power settings, presentation mode, and more.

This concludes my exuberant Windows tip of the day. I hope you found it useful.

I’m looking at 5-7 files of data in CSV format. The columns and format can change every 3 to 6 months. What I wanted was a strongly typed wrapper / data transfer object for all the CSV file formats I had to work with, and I thought this would be a good opportunity to try a T4 template.

If you haven’t heard of T4 templates before, they are a technology worth your time to investigate. Hanselman has a pile of links in his post T4 (Text Template Transformation Toolkit) Code Generation – Best Kept Visual Studio Secret.

Each CSV file I’m working with has a header row:

PID,CaseID,BirthDate,DischargeDate,Status …

1001,2001,1/1/1970,6/1/2009,Foo …

… and so on for ~ 100 columns.

I wanted a template that could look at every CSV file it finds in the same directory as itself and create a class with a string property for each column. The template would derive the name of the class from the filename. Here’s a starting point:

    <#@ template language="c#v3.5" hostspecific="true" #>
    <#@ import namespace="System.IO" #>
    <#@ import namespace="System.Collections.Generic" #>
    using System;

    namespace Acme.Foo.CsvImport
    {
    <#
        foreach(var fileName in GetCsvFileNames())
        {
            var fields = GetFields(fileName);
            var className = GenerateClassName(fileName);
    #>
        public class <#= className #> : ImportRecord
        {
            public <#= className #>(string data)
            {
                var fields = data.Split(',');
    <#      for(int i = 0; i < fields.Length; i++) { #>
                <#= fields[i] #> = (fields[<#= i #>]);
    <#      } #>
            }

    <#      foreach(var field in fields) { #>
            public string <#= field #> { get; set; }
    <#      } #>
        }
    <#
        }
    #>
    } // end namespace

    <#+
        private string GenerateClassName(string fileName)
        {
            return Path.GetFileName(fileName).Substring(0,2) + "_ImportRecord";
        }

        private string[] GetFields(string fileName)
        {
            using (var stream = new StreamReader(File.OpenRead(fileName)))
            {
                return stream.ReadLine().Split(',');
            }
        }

        private IEnumerable<string> GetCsvFileNames()
        {
            var path = Host.ResolvePath("");
            return Directory.GetFiles(path, "*.csv");
        }
    #>

The template itself needs some polishing, and the generated code needs some error handling, but this was enough to prove how easy it is to spit out type definitions from a CSV header:

    public class AA_ImportRecord
    {
        public AA_ImportRecord(string data)
        {
            var fields = data.Split(',');
            ID = fields[0];
            CaseID = fields[1];
            BirthDate = fields[2];
        }

        public string ID { get; set; }
        public string CaseID { get; set; }
        public string BirthDate { get; set; }
        // ...
    }
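To show how a generated type might be consumed, here is a rough sketch that turns the lines of a CSV file into records. The `AA_ImportRecord` class below is a hand-written stand-in for the generated output (trimmed to three columns), and `ImportDemo.Parse` is a hypothetical helper, not something the template produces.

```csharp
using System;
using System.Linq;

// Stand-in for the generated class; in a real project this type would
// come from the template output rather than being typed by hand.
public class AA_ImportRecord
{
    public AA_ImportRecord(string data)
    {
        var fields = data.Split(',');
        ID = fields[0];
        CaseID = fields[1];
        BirthDate = fields[2];
    }

    public string ID { get; set; }
    public string CaseID { get; set; }
    public string BirthDate { get; set; }
}

public static class ImportDemo
{
    // Hypothetical helper: skips the header row and wraps every
    // remaining line in a strongly typed record.
    public static AA_ImportRecord[] Parse(string[] lines)
    {
        return lines.Skip(1)
                    .Select(line => new AA_ImportRecord(line))
                    .ToArray();
    }
}
```

Calling `ImportDemo.Parse(File.ReadAllLines(path))` gives an array where each column is a named property instead of an index into a string array. Like the generated code, this sketch does no error handling and would fail on a short or malformed line.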

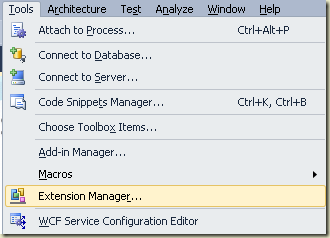

One of the features I think will be a big hit in Visual Studio 2010 is the Extension Manager. As of Beta 2, you can find the Extension Manager under the Tools menu.

The Extension Manager allows you to download and install Visual Studio extensions from Microsoft and other 3rd parties. These extensions can include new controls and project templates for ASP.NET, Silverlight, and WPF, as well as new tools that work both inside and outside of Visual Studio. The first tool I saw when opening the Extension Manager was Gibraltar – a feature-rich logging, monitoring, and profiling tool recently demoed to me by eSymmetrix’s founder Jay Cincotta.

The Extension Manager is like having the Visual Studio Gallery built into Visual Studio, and saves you the hassle of going to the site, downloading a file, and double-clicking to install (the extension manager automates all this work with some help from web services exposed by the gallery). As a bonus, the manager even understands the dependencies between packages and can ensure you have everything you need for an extension to work. Some of the other extensions you’ll find on the site for 2010 include:

Visual Studio 2010 is the most extensible version of Visual Studio yet, and the Extension Manager will really highlight this fact. But, could it be even better?

Yes it could!

It’s painful to get set up with everyday development tools. It’s also frustrating to see how quickly developers on other platforms can get up and running. Package managers in general and RubyGems in particular make setup easy in an open source environment where fragmentation and spontaneity supposedly ruin a good integration story.

I realize the following scenario has some issues (like licensing issues), but in an ideal world, I’d be able to open Visual Studio and tell it I want the following:

… and so on. When I come back from lunch everything is downloaded, installed, and ready to go.

Three weeks later I might get a notification that a new ASP.NET MVC Preview is available. I’d like to punch a button in Visual Studio to pull down the latest instead of poking around on CodePlex where downloads are hard to find.

Tools like the Extension Manager and Web Platform Installer are getting us closer, but getting set up and staying up-to-date is still too big a drain on productivity.

Seven years ago, Robin Sharp divided the lifecycle of a programming language into 7 phases:

Conception

Adoption

Acceptance

Maturation

Inefficiency

Deprecation

Decay

I think Robin is correct. Once a language becomes mainstream and reaches the “acceptance” phase, it’s only a matter of time till it becomes inefficient. This is because language designers face a dilemma:

… or …

Both directions have drawbacks.

In the former case, the language doesn’t keep up with the industry. Since the language is not “state of the art”, it only adds friction and inefficiency to the craft of building software applications.

In the latter case, the language is constantly evolving to meet the state of the art, but also collecting baggage and extra weight along the way as practices fall out of fashion. Eventually the language is so large and complex it again carries an inherent inefficiency.

Since C#’s inception, we’ve seen the addition of generics, lambdas, and dynamic programming features to the language. These are all welcome additions for me, and make the language better.

At the same time we’ve seen the rise of new paradigms for functional and multi-threaded programming. I have to wonder if keywords like lock, volatile, and delegate are out of fashion, like skinny leather ties that only clutter up a tie rack.

Do the new features in C# 4.0, like the dynamic keyword, push the language solidly into the maturation phase? Or … after 10 years … are we past the maturation tipping point where C# can only descend towards inefficiency?

What do you think?

Some projects use a container like StructureMap to completely replace MVC’s DefaultControllerFactory. They do so by registering all controllers by name using StructureMap’s scanning feature during application startup.

    ObjectFactory.Initialize(x =>
        x.Scan(s =>
        {
            s.AssembliesFromPath("bin");
            s.AddAllTypesOf<IController>()
             .NameBy(type => type.Name.Replace("Controller", ""));
        }));

A simple controller factory to make this work would look like this:

    public class SimpleControllerFactory : IControllerFactory
    {
        public IController CreateController(
            RequestContext requestContext, string controllerName)
        {
            return ObjectFactory.GetNamedInstance<IController>(controllerName);
        }
        // ...
    }

As I mentioned in a previous post, using Areas in MVC 2 means you have the potential for multiple controllers with the same name. A Home controller in the parent project, and a Home controller in each sub-project, for example. Now the simple approach we are using in the code above breaks down. We could do some work to make sure we are registering and looking up types using the proper namespaces, but the logic to look at the namespace constraints is already embedded in MVC’s DefaultControllerFactory.

An easier approach is to use the DefaultControllerFactory to look up controller types and only use StructureMap to instantiate the controller and resolve dependencies. Doing so means we don’t need to scan any assemblies. StructureMap (like most IoC frameworks) is capable of instantiating an unregistered type as long as the type is concrete. All we need to do is derive from DefaultControllerFactory and override the GetControllerInstance method.

    public class BetterControllerFactory : DefaultControllerFactory
    {
        protected override IController GetControllerInstance(
            RequestContext requestContext, Type controllerType)
        {
            IController result = null;
            if (controllerType != null)
            {
                result = ObjectFactory.GetInstance(controllerType)
                             as IController;
            }
            return result;
        }
    }

Moral of the story: Don’t automatically throw away the DefaultControllerFactory. You may find it has some conventions you can make use of!

With apologies to Robert Frost.

Hollywood hasn’t painted a flattering picture for artificially intelligent robots.

Isaac Asimov and Will Smith showed us how three simple laws could go wrong in I, Robot.

And decades earlier we had HAL. HAL wasn’t very nice to Dave.

It was Skynet that really drove the point home. Artificially intelligent robots are more of a threat to the human race than carbon dioxide and swine flu put together.

Geek dad John Baichtal has me thinking that humans with real intelligence and ingenuity wreak enough havoc all by themselves. John pointed to a research paper from the University of Washington that looks at the vulnerabilities of connected robots.

Vulnerability Example:

Usernames and passwords used to access and control the robots are not encrypted, except in the case of the Spykee, which only encrypts them when sent over the Internet. A malicious person could potentially intercept these to gain control of and access to the robots.

From the conclusions:

Household robots have different types of risks than traditional computers. With traditional computers, third-parties can try to get your financial information or destroy your files. With current and future household robots, third-parties can have eyes, ears, and “hands” in your home.

Currently you can buy a one day DDoS attack for $30. In the future I could see the black market charging $30 to “terrorize a family of 4 in Yeehaw Junction, FL with their networked Roomba”.

Keith Dahlby started a discussion with his post “Is Functional Abstraction Too Clever?” Read Keith’s post for the background, but I would agree with all the comments I’ve read so far and I say the functional approach is a good solution to the problem.

Keith’s post did get me thinking of things that could go wrong in the hands of a developer who doesn’t understand how in-memory LINQ is working.

As an example, let’s use Keith’s Values extension method …

    public static IEnumerable<int> Values(
        this Random random, int minValue, int maxValue)
    {
        while (true)
            yield return random.Next(minValue, maxValue);
    }

… and let’s say a developer is using the above to satisfy the following requirements.

What’s wrong with the following code?

    var values = new Random().Values(1, 100)
                             .Distinct()
                             .OrderBy(value => value)
                             .Take(10)
                             .ToArray();

Do you think it’s a subtle problem?

What’s an easy fix?
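One possible answer, sketched under the assumption that the goal is ten distinct random values returned in ascending order: OrderBy has to consume its entire source before it can yield the first item, and the source here never ends, so the query above never completes. Moving Take ahead of OrderBy bounds the sequence first. The `DistinctValuesDemo` class and `TenDistinctSorted` method are names invented for this sketch.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class RandomExtensions
{
    // Keith's extension method from above: an endless stream of values.
    public static IEnumerable<int> Values(
        this Random random, int minValue, int maxValue)
    {
        while (true)
            yield return random.Next(minValue, maxValue);
    }
}

public static class DistinctValuesDemo
{
    // Hypothetical helper showing the reordered query.
    public static int[] TenDistinctSorted(Random random)
    {
        return random.Values(1, 100)
                     .Distinct()
                     .Take(10)                 // bound the endless sequence first
                     .OrderBy(value => value)  // then sort only those ten items
                     .ToArray();
    }
}
```

Note that the reordering changes the meaning slightly: this returns ten distinct values sorted, not the ten smallest values of some larger sample. If the latter is what the requirements call for, the sample size has to be limited explicitly before sorting.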

OdeToCode by K. Scott Allen
