Fast forward to 2004. Everyone is obsessed with building “secure” software. Some say we can never be secure with Microsoft technologies.

If we look at what has happened over the last five years, perhaps we can see what might happen in the next five.

1) For scalability, expectations have leveled. In 1999 everyone believed they were building the next eBay and Amazon. Today, nobody is over-hyping the ability to go from 0 to huge overnight. I don’t foresee the same leveling off of expectations for secure software. If anything, the expectation is just starting to grow.

2) Security, interoperability, and flexibility have pushed scalability off the radar screen. On Aaron Skonnard's list, scalability is not even in the top 5 qualities to look for in enterprise software. Most shops with limited resources have to carefully select the qualities to focus on - we can’t have it all. Looking ahead, security and interoperability are here to stay for the next five years.

3) Scalability is easier today because of the platform and runtime. We moved from building web pages with loosely-typed interpreted pages to strongly-typed compiled pages. In some ways, the underlying platform lost features that were abused (think of ADO and server side cursors). Security in the next five years has to get easier too. It is currently hard to run as a non-admin. It is currently hard to run assemblies without full-trust. The platform and runtime can get better in this area, but they will not make security completely painless.

4) Scalability is easier because of the tools. It’s easy to set performance benchmarks and measure scalability with software. It’s easy to catch possible bottlenecks with syntactic analysis of an application: a cursor declaration in a SQL query, a static constructor in a class. Many security problems require the tougher task of semantic analysis. Where is the application saving a plaintext password? Is this string concatenation putting user input into a SQL string? These will be tough problems to uncover. Additional expertise and heuristics will need to exist in security tools.

5) Scalability education has been constant over the last five years. Follow some guidelines and work with the framework instead of against it, and you’ll have a decently scalable system. Don’t write your own connection pooling and thread pooling libraries – chances are the ones in place will work for 95% of the applications being developed. Security education, on the other hand, is still in infancy. You’ll find a good example on any given day in the newsgroups. Someone will post a solution which opens and disposes a database connection in an efficient manner, but somewhere in between concatenates a textbox value into a SQL string. This education takes some time.
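To make the newsgroup example concrete, here is a minimal sketch of the parameterized alternative to concatenating a textbox value into a SQL string. The class name, table, and column names are hypothetical, and the 50-character parameter size is an assumption rather than anything taken from a real schema.

```csharp
using System.Data;
using System.Data.SqlClient;

// Hypothetical helper: Customers, CustomerId, and Name are illustrative names only.
public static class CustomerQueries
{
    // The risky pattern would be:
    //   "SELECT ... WHERE Name = '" + nameFromTextBox + "'"
    // which lets user input become part of the SQL text.
    // Here the input travels as a parameter value instead.
    public static SqlCommand BuildFindByName(SqlConnection connection, string nameFromTextBox)
    {
        SqlCommand command = new SqlCommand(
            "SELECT CustomerId, Name FROM Customers WHERE Name = @name",
            connection);

        // The size (50) is an assumed column width.
        command.Parameters.Add("@name", SqlDbType.NVarChar, 50).Value = nameFromTextBox;
        return command;
    }
}
```

The SQL text stays constant no matter what the user types, which is also the kind of property a security tool could check for without guessing at intent.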

In five more years I think we will be better off, but not completely there. We moved from being obsessed with scalability to expecting it out of the box in five years. However, after five more years we will still be obsessed with writing secure software, because security will still be difficult. As they say on Wall Street, past performance is not indicative of future returns.

Any problem in computer science can be solved with another layer of indirection. – Butler Lampson

Since the dawn of the HttpModule class in .NET 1.0, we’ve seen an explosion of URL rewriting in ASP.NET applications. Before HttpModule, URL rewriting required an ISAPI filter written in C++, but HttpModule brought the technique to the masses.

URL rewriting allows an application to target a different internal resource than the one specified in the incoming URI. While the browser may say /vroot/foo/bar.aspx in the address bar, your application may process the request with the /vroot/bat.aspx page. The foo directory and the bar.aspx page may not even exist. Most of the directories and pages you see in the address bar when viewing a .Text blog do not physically exist.

From an implementation perspective, URL rewriting seems simple:
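A minimal sketch of the idea, assuming a hypothetical module named SimpleRewriteModule and a single hard-coded rule borrowed from the /vroot/foo/bar.aspx example above (a real application would more likely look its rules up in a configuration file or database table):

```csharp
using System;
using System.Web;

// Hypothetical module: the class name and the rewrite rule are illustrative only.
public class SimpleRewriteModule : IHttpModule
{
    public void Init(HttpApplication application)
    {
        // Hook the request early, before ASP.NET picks a handler for the URL.
        application.BeginRequest += new EventHandler(OnBeginRequest);
    }

    public void Dispose()
    {
    }

    private void OnBeginRequest(object sender, EventArgs e)
    {
        HttpContext context = ((HttpApplication)sender).Context;
        string target = MapToInternalPath(context.Request.Path);
        if (target != null)
        {
            // Process the request with a different page; the browser's URL is unchanged.
            context.RewritePath(target);
        }
    }

    // Maps ".../foo/bar.aspx" to ".../bat.aspx"; returns null when no rule applies.
    public static string MapToInternalPath(string requestPath)
    {
        const string suffix = "/foo/bar.aspx";
        if (requestPath != null &&
            requestPath.EndsWith(suffix, StringComparison.OrdinalIgnoreCase))
        {
            string root = requestPath.Substring(0, requestPath.Length - suffix.Length);
            return root + "/bat.aspx";
        }
        return null;
    }
}
```

The module would still need to be registered in the httpModules section of web.config before ASP.NET would call it.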

If you want a comprehensive article about implementing URL rewriting and why, see Scott Mitchell’s article: “URL Rewriting in ASP.NET”, although I believe there is a simpler solution to the post-back problem (see the end of this post). I’m still looking for an ASP.NET topic on which Scott Mitchell has not written an article. If anyone finds one, let me know. Scott’s a great writer.

Conceptually, URL rewriting is a layer of indirection at a critical stage in the processing pipeline. It allows us to separate the view of our web application from its physical implementation. We can make one ASP.NET web site appear as 10 distinct sites. We can make one ASPX web form appear as 10,000 forms. We can make perception appear completely different from reality.

URL rewriting is a virtualization technique.

URL rewriting does have some drawbacks. Some applications take a little longer to figure out when they use URL rewriting. When the browser says an error occurred on /vroot/foo/bar.aspx, and there is no file with that name in Solution Explorer, it’s a bit disconcerting. Like most programs driven by meta-data, more time might be spent in a configuration file or database table learning about what the application is doing instead of in the code. This is not always intuitive, and for maintenance programmers can lead to the behavior seen in Figure 1.

Figure 1: Maintenance programmer who has been debugging and chained to a desk for months, finally snaps. It's the last time that machine will do any URL rewriting.

Actually, I’m not sure what the behavior in figure 1 is. It might be a Rob Zombie tribute band in Kiss makeup, or some neo-Luddite ritual, but either way it is too scary to look at and analyze.

One important detail about URL rewriting is often left out. In the destination page, during the Page_Load event, it’s important to use RewritePath again to point to the original URL if your form will POST back to the server. This will not redirect the request again, but it will let ASP.NET properly set the action attribute of the page’s form tag to the original URL, which is where you want to post back to.

Context.RewritePath(Path.GetFileName(Request.RawUrl));

Without this line, the illusion shatters, and people rant. I learned this trick from the Community Starter Kit, which is a great example of the virtualization you can achieve with URL rewriting.

John Woods stopped in yesterday to clarify some of the finer points of the nasal demon effect. John has another contribution to the world of knowledge:

"SCSI is *NOT* magic. There are *fundamental technical reasons* why you have to sacrifice a young goat to your SCSI chain every now and then."

The toolkits worked. We moved a tremendous amount of information about mortgages back and forth. Of course, there were some hitches here and there, but nothing we couldn’t figure out after a quick search of soap-user. It was simple – just so plain and simple.

Because of this experience, I have a great deal of reservations about all of these WS specifications. I believe if I were to approach that same project today, the experience would involve considerably more pain. Someone would want to use a WS toolkit here and there, and we’d be buried just trying to pass an XML fragment back and forth. It is going to take at least 3 to 5 years to shake out everybody’s interpretation and implementation of the specs to reach a plateau of interoperability, as well as revisions and fine-tuning to the specs themselves. You might say I am a member of the loyal opposition.

Do we need more standards? Yes. Do we need them now? Yes. Amazon could use them. Ted says the WS stacks are overkill for Amazon, but even though they may do millions of web service transactions, these are transactions in the loose sense of the word, not transactions in the cash flow sense of the word. Just ask Carl. Everyone seems to agree the specifications are bulky and opaque. What company would offer web services for the masses based on specifications the tool vendors can’t keep up with?

After four years of web service hype, simple is still the only approach that works for everyone. It's going to be a long, slow road. It will give everyone time to mix in practical experience with the theory of specifications.

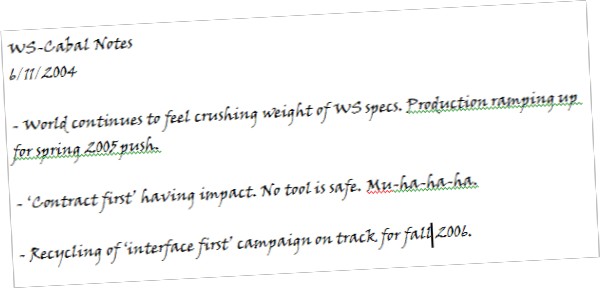

A source entrusted me with the following paper she found at a Starbucks this summer. The hand written notes are proof of a hidden agenda lurking in the world of SOA.

I’m trying to get this to Dan Rather, but he is not returning my phone calls.

Building enterprise scale health care software is a massive effort. Not only do hospitals need the general business functionality of inventory, accounting, and payroll, but there are specialized needs in every corner of the hospital: nursing, radiology, pathology, microbiology, blood bank, admissions, pharmacy, and patient care records, just to name a few. Every one of these areas has specific needs to address with software.

I’ll take a wild guess and say that by 2006 this team will have 500 developer-years of work in the project. I know some other established companies in the US market. I’ll make another guess and say they have 5,000 developer-years of work invested in their solutions - but there is a huge difference.

The companies I know work in C++, and they work from scratch. They write their own hardware abstraction layers, their own device drivers, and their own proprietary database formats. It’s easy to burn 5,000 developer-years building software this way, and here is the end result: They give their customers a custom report building application that requires instructions like the following:

VAL=IF{@account^/R.SEG.TAP %Z.rw.segment("ACT.TAP.cust.first.statement.date.

VAL=",@.db)},/R.SEG.VAL["FIRST"]

That's not from legacy software, that's from the most recent shipping version. It sure keeps the consultants and trainers busy.

On the other hand, if someone builds the software using .NET, an off-the-shelf relational database engine, third-party reporting tools, and lets a commercial operating system take care of, well, operating the system, they can catch up pretty quickly. At least in theory. It will be interesting to see how far they go by 2010.

I tried to update my Linux distribution this week by starting over on a fresh virtual PC. I tried SuSE 9.1 Personal only to have a kernel panic early in the installation. Then I tried Fedora Core 2 only to have another kernel panic. That’s when I began to suspect a more sinister problem and found this bug entry - complete with a 57-step workaround.

Maybe I'll just wait three months and try again.

OdeToCode by K. Scott Allen
