In a previous post we used a Node.js script to copy a MongoDB collection into Azure Table Storage. The program flow is hard to follow because the asynchronous APIs of both drivers require us to butcher the procedural flow of steps into callback functions. For example, consider the simple case of executing three procedural steps: connect to MongoDB, close the connection, and print a message.

In code, the steps aren’t as straightforward:

var mongo = require('mongodb').MongoClient;

var main = function(){

mongo.connect('mongodb://localhost', mongoConnected);

};

var mongoConnected = function(error, db){

if(error) return console.dir(error);

db.close();

console.log('Finished!');

};

main();

Some amount of mental effort is required to see that the mongoConnected function is a continuation of main. Callbacks are painful.

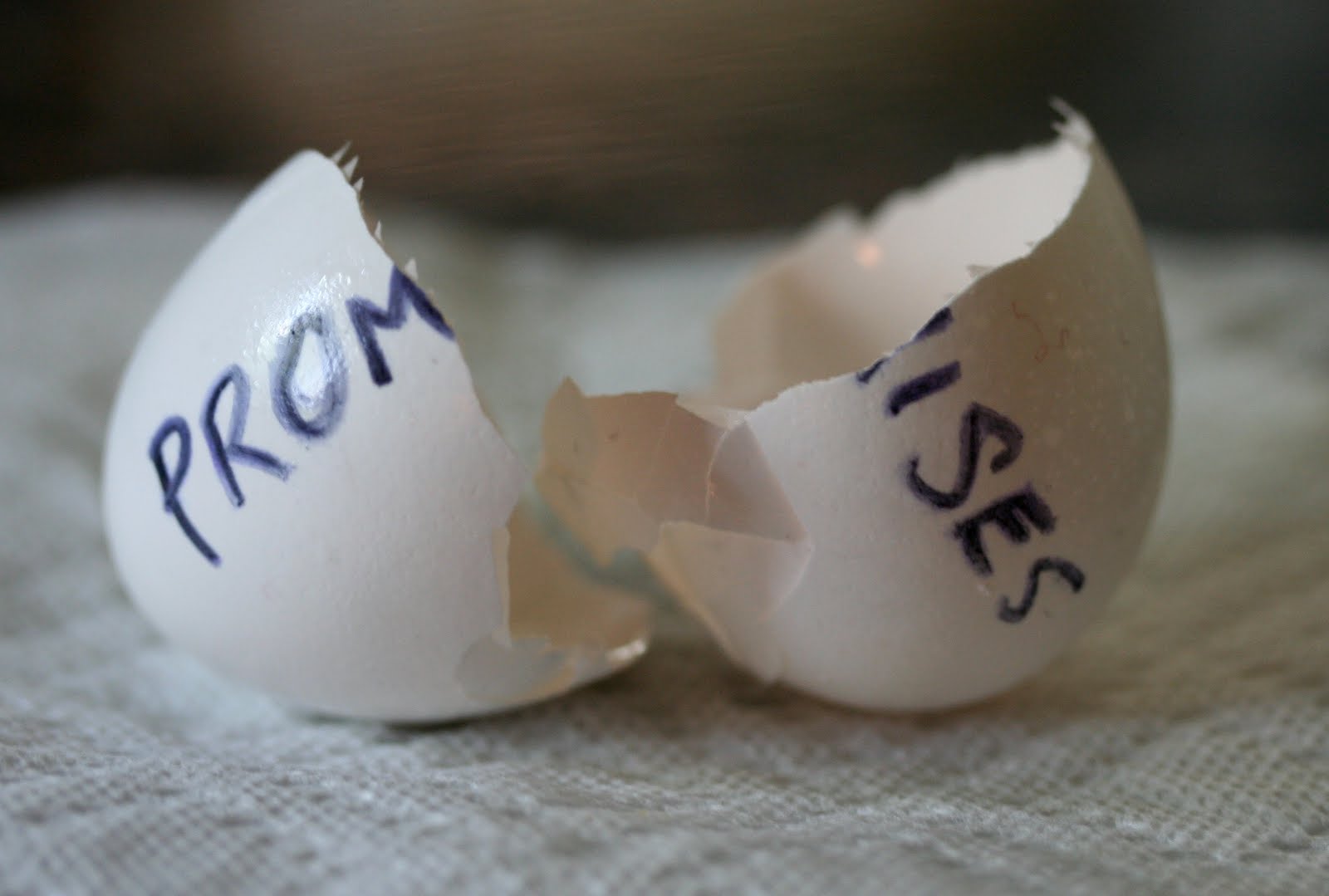

When anyone complains about the pain of callback functions, the usual recommendation is to use promises. Promises have been around for a number of years and there are a variety of promise libraries available for both Node and client scripting environments, with Q being a popular package for Node.

Promises are useful, particularly when they destroy a pyramid of doom. But promises can’t un-butcher the code sliced into functions to meet the demands of an asynchronous API. Also, promises require shims to transform a function expecting a callback into a function returning a promise, which is what Q.nfcall will do in the following code.

var Q = require('q');

var mongo = require('mongodb').MongoClient;

var main = function(){

Q.nfcall(mongo.connect, 'mongodb://localhost2')

.then(mongoConnected)

.catch(function(error){

console.dir(error);

});

};

var mongoConnected = function(db){

db.close();

console.log('Finished!');

};

main();
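To see roughly what a shim like Q.nfcall has to do, here is a minimal sketch built on the native Promise constructor instead of Q. The names wrapWithPromise and fakeConnect are hypothetical and invented for illustration. fakeConnect only mimics the (error, result) callback convention and is not the MongoDB driver.

```javascript
"use strict";

// Hypothetical helper: wraps a function expecting a Node-style
// callback (error, result) in a function that returns a promise.
var wrapWithPromise = function(fn){
    return function(){
        var args = Array.prototype.slice.call(arguments);
        return new Promise(function(resolve, reject){
            // Append the callback the wrapped function expects.
            args.push(function(error, result){
                if(error) reject(error);
                else resolve(result);
            });
            fn.apply(null, args);
        });
    };
};

// Hypothetical stand-in for a callback API such as mongo.connect.
var fakeConnect = function(url, callback){
    setImmediate(function(){
        if(!url) callback(new Error('No url given'));
        else callback(null, { url: url, close: function(){} });
    });
};

var connect = wrapWithPromise(fakeConnect);

connect('mongodb://localhost')
    .then(function(db){
        db.close();
        console.log('Finished!');
    })
    .catch(function(error){
        console.dir(error);
    });
```

The shim removes the callback from the call site, but the continuation still lives in a separate function passed to then.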

I’d say promises don’t improve the read-ability or write-ability of the code presented so far (though we can debate the usefulness if multiple calls to then are required). This is the current state of JavaScript, but JavaScript is evolving.

ECMAScript 6 (a.k.a. Harmony) introduces the yield keyword. Anyone with some programming experience in C# or Python (and a number of other languages, except Ruby) will already be familiar with how yield can suspend execution of a function and return control (and a value) to the caller. At some later point, execution can return to the point just after yield occurred. In ES6, functions using the yield keyword are known as generator functions and have a special syntax (function*), as the following code demonstrates.

"use strict";

var numberGenerator = function*(){

yield 1;

yield 2;

console.log("About to go to 10");

yield 10;

};

for(let i of numberGenerator()){

console.log(i);

}

A couple of other notes about the above code:

The code will print:

1

2

About to go to 10

10

Instead of using for-of, a low level approach to working with generator functions is to work with the iterator they return. The following code will produce the same output.

let sequence = numberGenerator();

let result = sequence.next();

while(!result.done){

console.log(result.value);

result = sequence.next();

}
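The while loop above depends on the shape of the object that next returns. As a small sketch, the following collects five results from the same generator so the value and done properties are visible, including what happens when next is called after the generator has finished.

```javascript
"use strict";

var numberGenerator = function*(){
    yield 1;
    yield 2;
    console.log("About to go to 10");
    yield 10;
};

let sequence = numberGenerator();
let results = [];

// Ask for more results than the generator has yields.
for(let i = 0; i < 5; i++){
    results.push(sequence.next());
}

// The first three results carry the yielded values with done === false.
// The last two have value === undefined and done === true.
console.log(results);
```

Since this generator has no return statement, the result that reports done as true carries no value, which is why both for-of and the while loop print only the three yielded numbers.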

However, what is more interesting about working with iterator objects at this level is how you can pass a value to the next method, and the value will become the result of the last yield expression inside the generator. The ability to modify the internal state of the generator is fascinating. Consider the following code, which generates 1, 2, and 20.

var numberGenerator = function*(){

let result = yield 1;

result = yield 2 * result;

result = yield 10 * result;

};

let sequence = numberGenerator();

let result = sequence.next();

while(!result.done){

console.log(result.value);

result = sequence.next(result.value);

}

The yield keyword has interesting semantics because not only does the word itself imply the giving up of control (as in ‘I yield to temptation’), but also the production of some value (‘My tree will yield fruit’), and now we also have the ability to communicate back into the yield expression to produce a value from the caller.
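To make the two directions of communication easier to trace, here is a small sketch with a hypothetical echoGenerator that records everything the caller sends in. One detail worth noticing is that the argument to the very first call to next is dropped, because no yield expression is waiting to receive it yet.

```javascript
"use strict";

// Hypothetical generator that collects the values pushed in by the caller.
var echoGenerator = function*(){
    let received = [];
    received.push(yield 'first');
    received.push(yield 'second');
    return received;
};

let sequence = echoGenerator();

// Starts the generator. No yield is suspended yet, so 'ignored' is discarded.
let first = sequence.next('ignored');

// 'A' becomes the value of the first yield expression.
let second = sequence.next('A');

// 'B' becomes the value of the second yield expression, and the
// generator runs to its return statement.
let third = sequence.next('B');

console.log(first.value);   // first
console.log(second.value);  // second
console.log(third.value);   // [ 'A', 'B' ]
console.log(third.done);    // true
```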

Imagine then using yield in a generator function to produce promise objects. The generator function can yield one or more promises and suspend execution to let the caller wait for the resolution of the promise. The caller can iterate over multiple promises from the generator and process each one in turn to push a result into the yield expressions. What would it take to turn a dream into a reality?

It turns out that Q already includes an API for working with Harmony generator functions, specifically the spawn method, which will immediately execute a generator. The spawn method allows us to un-butcher the code with three simple steps.

"use strict";

var Q = require('q');

var mongo = require('mongodb').MongoClient;

var main = function*(){

try {

var db = yield Q.nfcall(mongo.connect, 'mongodb://localhost');

db.close();

console.log("Finished!");

}

catch(error) {

console.dir(error);

}

};

Q.spawn(main);

Not only does spawn un-butcher the code, but it also allows for simpler error handling as a try catch can now surround all the statements of interest. To gain a deeper understanding of spawn you can write your own. The following code is simple and makes two assumptions. First, it assumes there are no errors, and secondly it assumes all the yielded objects are promises.

var spawn = function(generator){

var process = function(result){

// Stop once the generator has finished; its final value is not a promise.

if(!result.done) {

result.value.then(function(value){

process(sequence.next(value));

});

}

};

let sequence = generator();

let next = sequence.next();

process(next);

};

spawn(main);
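Both assumptions can be relaxed with a little more code. The following is only a sketch of one way to do it and is not how Q implements spawn. It uses native promises, wraps every yielded value with Promise.resolve so non-promises are tolerated, and forwards rejections into the generator with the iterator's throw method so a try catch inside the generator can see them. The names step, failingConnect, and outcome are hypothetical.

```javascript
"use strict";

var spawn = function(generator){
    let sequence = generator();

    // Advance the generator once, then wait for whatever it yielded.
    var step = function(advance){
        let result;
        try {
            result = advance();
        } catch(error) {
            // The generator did not catch the error, so reject the outcome.
            return Promise.reject(error);
        }
        if(result.done) return Promise.resolve(result.value);

        return Promise.resolve(result.value).then(
            function(value){
                return step(function(){ return sequence.next(value); });
            },
            function(error){
                // Re-raise the rejection at the suspended yield.
                return step(function(){ return sequence.throw(error); });
            }
        );
    };

    return step(function(){ return sequence.next(); });
};

// Hypothetical stand-in for a connection attempt that fails.
var failingConnect = function(){
    return Promise.reject(new Error('connection refused'));
};

var main = function*(){
    try {
        yield failingConnect();
        return 'connected';
    } catch(error) {
        return 'caught: ' + error.message;
    }
};

var outcome = spawn(main);

outcome.then(function(message){
    console.log(message);   // caught: connection refused
});
```

Returning a promise from spawn is a design choice of this sketch. It gives the caller a place to observe the generator's return value or an error the generator did not handle.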

While the syntax is better, it is unfortunate that promises aren’t baked into all modules, as the shim syntax is ugly and changes the method signature. Another approach is possible, however.

The suspend module for Node can work with or without promises, but for APIs without promises the solution is rather clever. The resume method can act as a callback factory that will allow suspend to work with yield.

var mongo = require('mongodb').MongoClient;

var suspend = require('suspend');

var resume = suspend.resume;

var main = function*(){

try {

let db = yield mongo.connect('mongodb://localhost', resume());

db.close();

console.log("Finished!");

}

catch(error) {

console.dir(error);

}

};

suspend.run(main);
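To get a feel for how a callback factory can drive a generator, here is a deliberately simplified sketch. It is not the suspend module's implementation, and run, resume, advance, activeSequence, and fakeConnect are hypothetical names for this illustration. The idea is that resume captures the generator that is currently executing and returns a Node-style callback that either pushes the result into the suspended yield or throws the error there.

```javascript
"use strict";

// The generator that is executing right now, if any.
var activeSequence = null;

// Run one action against a generator while marking it as active.
var advance = function(sequence, action){
    let previous = activeSequence;
    activeSequence = sequence;
    try {
        return action();
    } finally {
        activeSequence = previous;
    }
};

// Callback factory: the returned function resumes the generator
// that called resume().
var resume = function(){
    let sequence = activeSequence;
    return function(error, value){
        // Defer, so the generator has reached its yield even when
        // the API invokes the callback synchronously.
        setImmediate(function(){
            advance(sequence, function(){
                return error ? sequence.throw(error) : sequence.next(value);
            });
        });
    };
};

var run = function(generator){
    let sequence = generator();
    advance(sequence, function(){ return sequence.next(); });
};

// Hypothetical callback API that calls back synchronously on purpose.
var fakeConnect = function(url, callback){
    callback(null, { url: url });
};

var connectedUrl = null;

run(function*(){
    let db = yield fakeConnect('mongodb://localhost', resume());
    connectedUrl = db.url;
    console.log('Connected to ' + connectedUrl);
});
```

In this sketch the value handed to yield is simply whatever the API call returns, and it is ignored. The interesting value arrives later through the callback. An error the generator does not catch will surface as an uncaught exception, which a real library would need to handle more carefully.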

And now the original ETL script looks like the following.

var azure = require('azure');

var mongo = require('mongodb').MongoClient;

var suspend = require('suspend');

var resume = suspend.resume;

var storageAccount = '...';

var storageAccessKey = '...';

var main = function *() {

let retryOperations = new azure.ExponentialRetryPolicyFilter();

let tableService = azure.createTableService(storageAccount, storageAccessKey)

.withFilter(retryOperations);

yield tableService.createTableIfNotExists("patients", resume());

let db = yield mongo.connect('mongodb://localhost/PatientDb', resume());

let collection = db.collection('Patients');

let patientCursor = collection.find();

patientCursor.each(transformAndInsert(db, tableService));

};

var transformAndInsert = function(db, table){

return function(error, patient){

if (error) throw error;

if (patient) {

transform(patient);

suspend.run(function*() {

yield table.insertEntity('patients', patient, resume());

});

console.dir(patient);

} else {

db.close();

}

};

};

var transform = function(patient){

patient.PartitionKey = '' + (patient.HospitalId || '0');

patient.RowKey = '' + patient._id;

patient.Ailments = JSON.stringify(patient.Ailments);

patient.Medications = JSON.stringify(patient.Medications);

delete patient._id;

};

suspend.run(main);

And this approach I like.

OdeToCode by K. Scott Allen
